I Built an AI Orchestrator and Ran It Overnight - Here's What Happened

Essay - Published: 2026.02.16 | 6 min read (1,622 words)

artificial-intelligence | build | create | software-engineering

DISCLOSURE: If you buy through affiliate links, I may earn a small commission. (disclosures)

I ran my AI orchestrator for 10 hours, including one overnight session, and it completed 15 tasks fully autonomously - triaging work items, researching context, writing PRDs, building features, and reviewing its own code.

It wasn't very fast and it wasn't particularly cheap, but it worked surprisingly well, and I think it tells us something about where the software engineering profession is headed.

In this post I want to share what the orchestrator is, how it works, how it performed, and why I keep coming back to the idea of a gardener when I think about the future of this profession.

15 Tasks in 10 Hours

The orchestrator completed 15 tasks across two 1-hour sessions and one 8-hour overnight session. These were small-to-medium tasks: mechanical fixes, edge cases, and improvements. Nothing huge, but real work that was worth doing eventually.

15 tasks in 10 hours isn't that fast; AIs can write code much faster than this. But each task went through my full 8-phase engineering pipeline. The idea is that it's okay to sacrifice speed for quality, since we want this to run ~fully autonomously. It's better for it to complete only 15 tasks overnight and need no rework than to complete 20 and leave us 5 to fix.

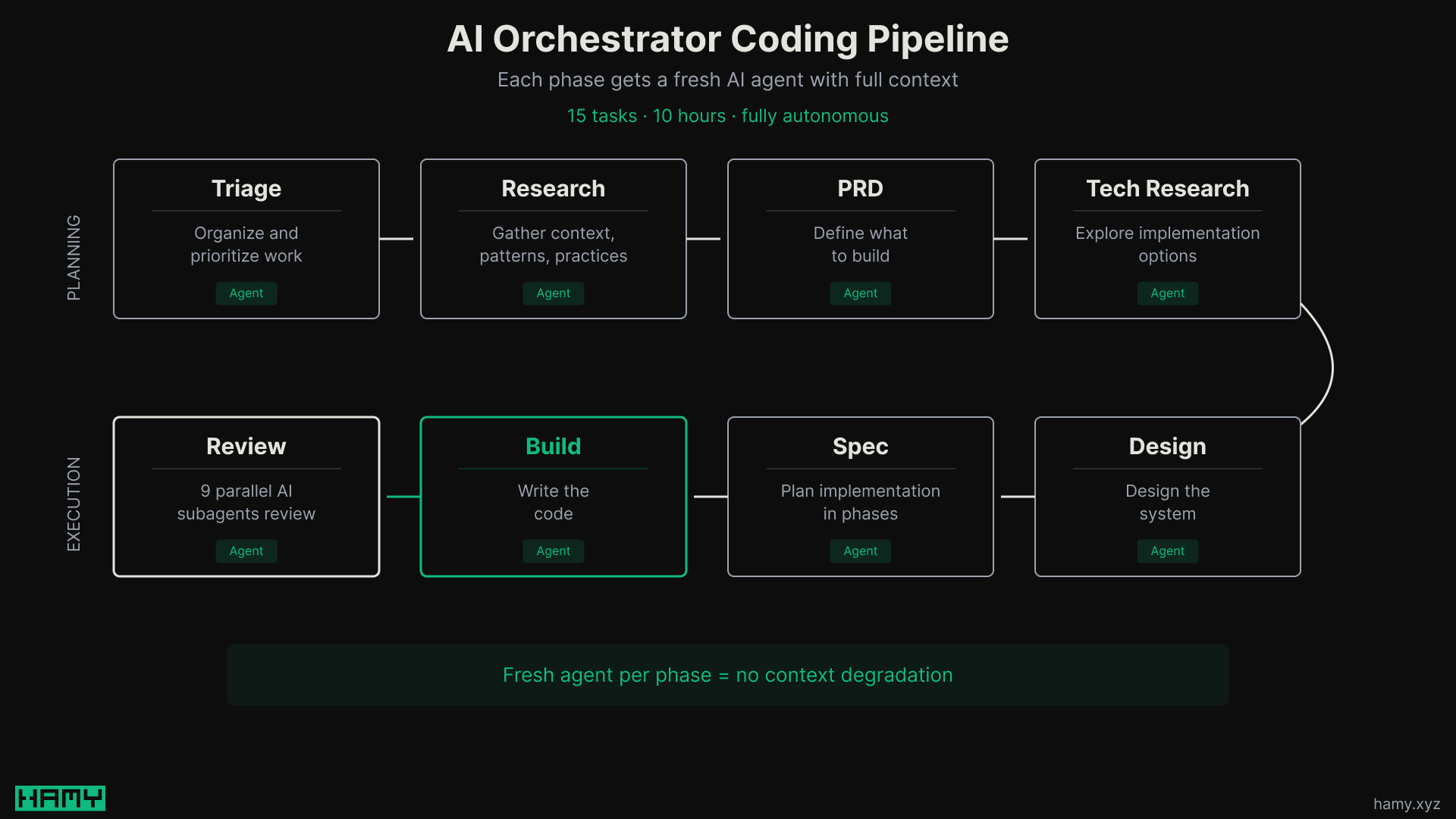

The software engineering pipeline I've landed on:

- Triage - organize and prioritize the work item

- Research - gather context

- PRD - define what to build

- Tech Research - explore implementation options

- Design - design the system

- Spec - plan the implementation in phases (see: spec-driven development)

- Build - write the code

- Review - 9 parallel AI subagents review the output
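If it helps to see the pipeline as data, here's a minimal sketch of the eight phases as an ordered config. This isn't my orchestrator's actual format: the `Phase` type, the `phases/*.md` instruction paths, and the `parallel` field are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Phase:
    name: str
    instructions: str  # hypothetical path to this phase's prompt file
    parallel: int = 1  # how many agents to spawn for this phase


# The eight phases, in order. Paths and field names are illustrative only.
CODING_PIPELINE: List[Phase] = [
    Phase("triage", "phases/triage.md"),
    Phase("research", "phases/research.md"),
    Phase("prd", "phases/prd.md"),
    Phase("tech_research", "phases/tech_research.md"),
    Phase("design", "phases/design.md"),
    Phase("spec", "phases/spec.md"),
    Phase("build", "phases/build.md"),
    Phase("review", "phases/review.md", parallel=9),  # 9 parallel reviewers
]


def next_phase(pipeline: List[Phase], current: str) -> Optional[Phase]:
    """Return the phase after `current`, or None when the pipeline is done."""
    names = [p.name for p in pipeline]
    i = names.index(current)
    return pipeline[i + 1] if i + 1 < len(pipeline) else None
```

Keeping the pipeline as plain data means a different workflow (say, a writing pipeline) is just a different list.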

This pipeline reflects how I like to write software, but others are seeing similar results with different approaches and tools like beads, gas town, and ccswarm.

If you want to see examples of what these phases look like, I've taken a snapshot of my AI files (commands / skills) and put them in the HAMY LABS Example Repo available to HAMINIONS Members.

How the Orchestrator Works

I've been building this over the past week as part of my Recurse Center batch. One of my focuses coming in was applied AI and I've leaned into that to improve my vibe engineering workflows.

Through experimentation I found that having set phases for AI agents dramatically increases quality and consistency. Each agent only has so much context, so spinning up a fresh one for each phase and moving the work through the pipeline leads to much better outputs. This is the basis of spec-driven development and Ralph loops, and I think the principle is as effective for humans as it is for AI - I wrote about the principles behind this in 5 AI Coding Best Practices from a Google AI Director.

That idea formed the basis of the orchestrator. It's a simple state machine that:

- Keeps a backlog of work items

- Triages and prioritizes them into configured pipelines

- Moves items through pipeline phases

- Spawns an AI agent for each phase
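A minimal sketch of that core loop might look like the following. It's simplified to a single hardcoded pipeline, and `WorkItem`, `Orchestrator`, and the injected `run_agent` callback are hypothetical names for illustration, not the orchestrator's real code. The point is that the loop itself is plain deterministic code; the AI only shows up inside `run_agent`.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# One hardcoded pipeline for simplicity; the real thing is configurable.
PHASES = ["triage", "research", "prd", "tech_research",
          "design", "spec", "build", "review"]


@dataclass
class WorkItem:
    title: str
    priority: int = 0     # higher runs first (assumed convention)
    phase_index: int = 0  # position in PHASES
    done: bool = False


@dataclass
class Orchestrator:
    # Called once per phase; should spawn a fresh agent and return True on success.
    run_agent: Callable[[WorkItem, str], bool]
    backlog: List[WorkItem] = field(default_factory=list)

    def add(self, item: WorkItem) -> None:
        self.backlog.append(item)

    def step(self) -> bool:
        """Advance the highest-priority unfinished item by one phase.

        Returns False once no unfinished work remains."""
        pending = [i for i in self.backlog if not i.done]
        if not pending:
            return False
        item = max(pending, key=lambda i: i.priority)
        phase = PHASES[item.phase_index]
        if self.run_agent(item, phase):
            item.phase_index += 1
            item.done = item.phase_index == len(PHASES)
        return True  # a failed phase is simply retried on a later step

    def run(self, max_steps: int = 1000) -> None:
        # max_steps is a crude guardrail against an item that never passes.
        for _ in range(max_steps):
            if not self.step():
                break
```

Injecting `run_agent` keeps the state machine testable: a stub that always returns `True` exercises the whole loop without spending any tokens.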

Pipelines are fully configurable - phase definitions, parallelism, work guardrails. Each phase points to a file you fill with instructions for your AI. The orchestrator doesn't care what AI CLI you use or what's in the instructions - it just keeps track of the core loop.
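Parallelism within a phase (like the 9 review subagents) can be sketched as a simple fan-out. Here `fan_out` and the "every reviewer must reply APPROVE" guardrail are hypothetical; real reviewers would return much richer feedback than a single word.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fan_out(run_agent: Callable[[int], str], parallel: int) -> List[str]:
    """Run `parallel` independent agents for one phase and collect outputs.

    Threads are enough here because each agent would be an external
    process or API call (I/O-bound). Results come back in index order,
    so reviewer 0's output is always outputs[0]."""
    with ThreadPoolExecutor(max_workers=parallel) as pool:
        return list(pool.map(run_agent, range(parallel)))


def review_passes(outputs: List[str]) -> bool:
    # Hypothetical guardrail: the phase only passes if every reviewer approves.
    return all(o.strip().upper() == "APPROVE" for o in outputs)
```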

I'm currently using it with Claude Code because that's my daily driver, but it could theoretically work with codex, opencode, or anything else that takes instructions from the command line.
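Here's a rough sketch of what CLI-agnostic spawning could look like: the command is just an argv template with a `{prompt}` placeholder, filled from the phase's instruction file. The placeholder convention and `spawn_agent` are assumptions, not my orchestrator's actual interface. For Claude Code, a template like `["claude", "-p", "{prompt}"]` uses its non-interactive print mode; check your CLI's docs for the equivalent.

```python
import subprocess
from pathlib import Path
from typing import List


def spawn_agent(command_template: List[str], instructions_file: Path,
                timeout_s: float = 3600) -> str:
    """Run one fresh, non-interactive agent process and return its stdout.

    `command_template` is any CLI argv containing the (assumed) placeholder
    "{prompt}", which is replaced with the instruction file's contents.
    Raises CalledProcessError if the CLI exits non-zero."""
    prompt = instructions_file.read_text()
    argv = [arg.replace("{prompt}", prompt) for arg in command_template]
    result = subprocess.run(argv, capture_output=True, text=True,
                            timeout=timeout_s, check=True)
    return result.stdout
```

Because each call is a brand-new process, every phase starts with an empty context window, and swapping CLIs only means swapping the template.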

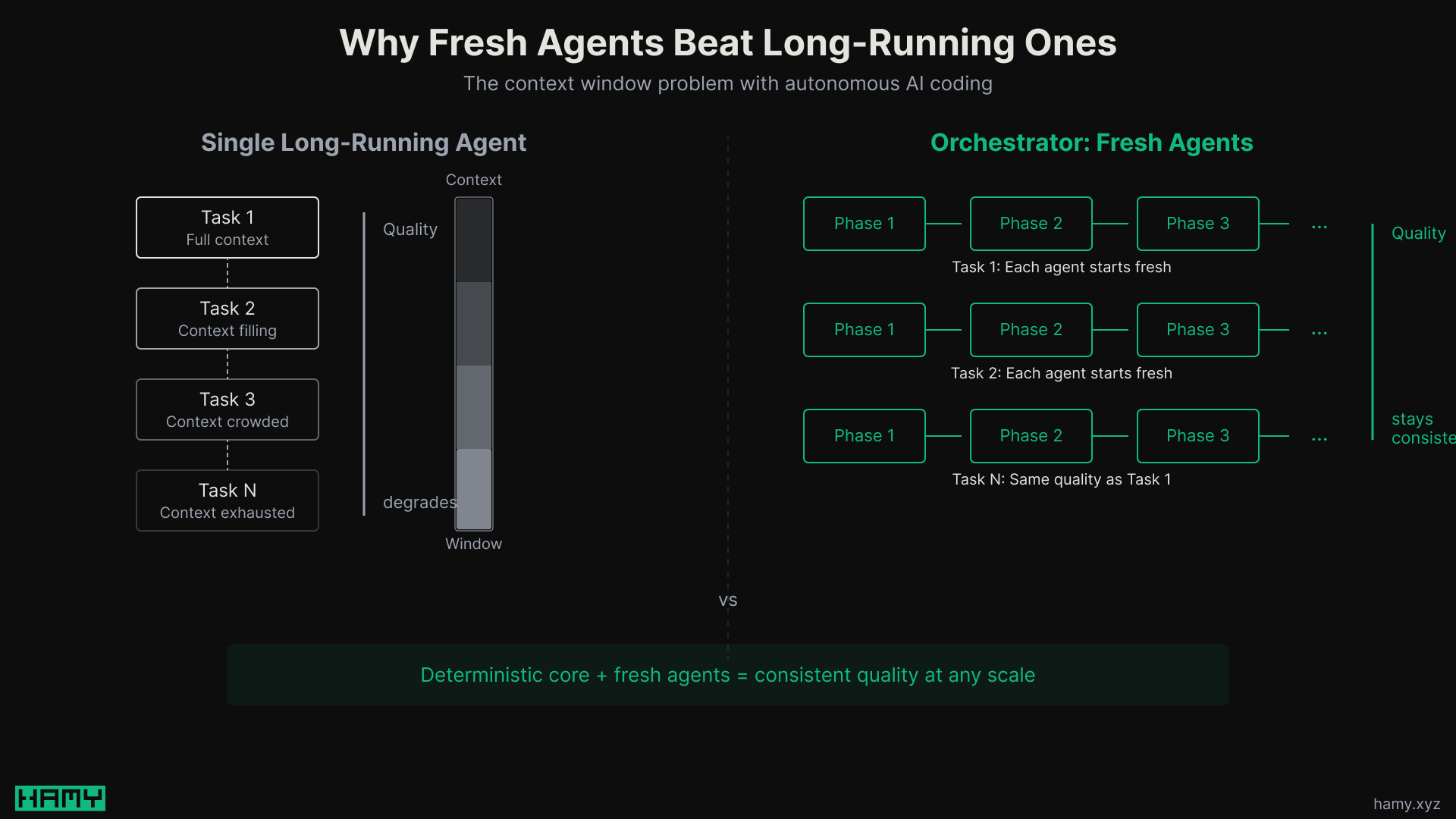

The primary thing we're trying to work around is LLMs' limited context windows. The more their context window fills up, the more likely they are to hallucinate and get off track. So instead of one long-running agent that slowly deteriorates as it loses context, you have a deterministic core that spawns fresh agents for each task.
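One way to keep each fresh agent's context small is to hand work between phases through files on disk rather than through a shared conversation. This is a hypothetical sketch: `build_prompt` and the artifact file names (like `prd.md` and `spec.md`) are illustrative assumptions, not necessarily how my orchestrator stores phase outputs.

```python
from pathlib import Path
from typing import Iterable


def build_prompt(instructions: str, artifact_dir: Path,
                 inputs: Iterable[str]) -> str:
    """Assemble a fresh agent's entire context for one phase.

    Instead of carrying a growing conversation forward, the agent sees only
    its phase instructions plus the specific artifacts earlier phases wrote
    to disk (e.g. the PRD and spec for a build phase). Missing files are
    skipped."""
    parts = [instructions]
    for name in inputs:
        path = artifact_dir / name
        if path.exists():
            parts.append(f"## {name}\n{path.read_text()}")
    return "\n\n".join(parts)
```

The build agent never sees the raw research notes unless its phase asks for them, which is what keeps its window small.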

You get the determinism of traditional code with the flexibility of modern LLMs.

This is similar to the Ralph loop pattern that's been gaining traction - fresh agent per task, test-driven completion, task decomposition. The difference is that mine encodes a full software engineering lifecycle rather than just a build-and-test loop, and the orchestrator can be configured to run any kind of pipeline, not just coding.

Because it's just a harness that spawns agents, it could be changed to use AI APIs directly in the future. The orchestrator is the stable part and the AI is the interchangeable part, which hopefully gives it some longevity as AIs and interfaces evolve in the coming years.

The Downsides of my Orchestrator

There were 3 main problems with my orchestrator.

It's slow. 8 phases per coding task is heavy, especially since we're only letting it iterate on small-to-medium tasks. I could speed it up by streamlining phases or using faster models, but for now I'm okay biasing toward quality. In this context, a slower agent that produces correct output is more useful than a fast agent that creates rework.

It's expensive. According to ccusage, it used ~$90 worth of tokens over 10 hours of runtime. That's a lot for a tool and more than I'd want to pay every day.

Luckily I'm on a Claude Max subscription, which is heavily subsidized by Anthropic, so I didn't actually pay this out of pocket. But even at face value, this is expensive for a tool yet cheap compared to hiring a software engineer. It'd be hard to find a competent SWE you could task with improving your codebase at this quality level for <$10/h, and for many things I wouldn't bring another SWE onto my project at all, just due to the overhead of onboarding and managing them.
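For a rough sense of scale, here's the back-of-the-envelope math using the numbers from this post (~$90, 10 hours, 15 tasks). The $90 is approximate, so treat the results as ballpark figures.

```python
# Inputs taken from the post: ~$90 of tokens (per ccusage), 10 hours, 15 tasks.
total_cost_usd = 90.0
runtime_hours = 10.0
tasks_completed = 15

cost_per_hour = total_cost_usd / runtime_hours           # 9.0, i.e. under $10/h
cost_per_task = total_cost_usd / tasks_completed         # 6.0
minutes_per_task = runtime_hours * 60 / tasks_completed  # 40.0

print(f"${cost_per_hour:.2f}/hour, ${cost_per_task:.2f}/task, "
      f"{minutes_per_task:.0f} min/task")
# prints "$9.00/hour, $6.00/task, 40 min/task"
```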

There's also a lot of room to optimize here with cheaper models, model routing (Opus for planning, Sonnet for implementation), fewer subagents per phase, or switching to cheaper AI CLIs entirely. So it's expensive, but I'm not too worried about it right now.
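Model routing could be as simple as a lookup from phase to model. This sketch assumes `opus` and `sonnet` are model aliases your CLI accepts via a `--model` flag (Claude Code accepts aliases like these, but verify for your tool); the phase grouping, the default model, and the `command_for` helper are hypothetical.

```python
from typing import Dict, List

# Hypothetical grouping: phases that mostly plan vs. the phase that implements.
PLANNING_PHASES = ["triage", "research", "prd", "tech_research", "design", "spec"]

# Opus for planning, Sonnet for implementation. "opus" / "sonnet" are
# assumed CLI model aliases.
MODEL_FOR: Dict[str, str] = {phase: "opus" for phase in PLANNING_PHASES}
MODEL_FOR["build"] = "sonnet"


def command_for(phase: str, default_model: str = "sonnet") -> List[str]:
    """Return an argv for one phase, choosing the model by phase.

    "{prompt}" is a placeholder to be replaced with the phase's
    instructions before the command is run."""
    model = MODEL_FOR.get(phase, default_model)
    # Assumes a Claude Code-style CLI: --model selects the model,
    # -p runs a single non-interactive prompt.
    return ["claude", "--model", model, "-p", "{prompt}"]
```

Phases not in the table (like review here) fall back to `default_model`, so trying a cheaper setup is a one-line change.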

Comprehension debt. A very real danger of this approach is that I'm further removed from the codebase. Code is landing that I didn't write and didn't directly oversee. I'm not sure how to fully solve this except by regularly coming back to re-familiarize yourself with the codebase: do a task yourself, ask the AI to walk through what's changed, or deep dive into a module. To be fair, this is the same as working at a company - so much code lands in a week that the system no longer looks like what you remembered. But it is jarring when it's a personal project you're building yourself.

What This Means for Software Engineering

My orchestrator isn't even that good, but it works surprisingly well and I built it in a couple of days. This tells me there will be way more investment here, and I'd guess we'll see some really compelling orchestrators go mainstream in the next 6-12 months.

This has me thinking about the future of the profession and how to stay relevant. I'm increasingly bullish on a few things:

- Software engineers will be more productive than ever before

- There will be more software than ever before

- Agents will write most of the code

- Humans will be valuable as managers, product managers, tech leads, architects, and staff engineers - at least in the short to mid term

There will still be a place for hand-written code in novel areas, domains that need deep expertise, and tricky spots, but AIs will do most of the typing. Others are seeing the same shift - Pragmatic Engineer says engineers become "directors," Addy Osmani says "composers" orchestrating ensembles, and Human Who Codes describes an autocomplete → conductor → orchestrator progression. For a growing number of engineers, AI is already writing 90% of their code (not necessarily the planning and review parts, but still).

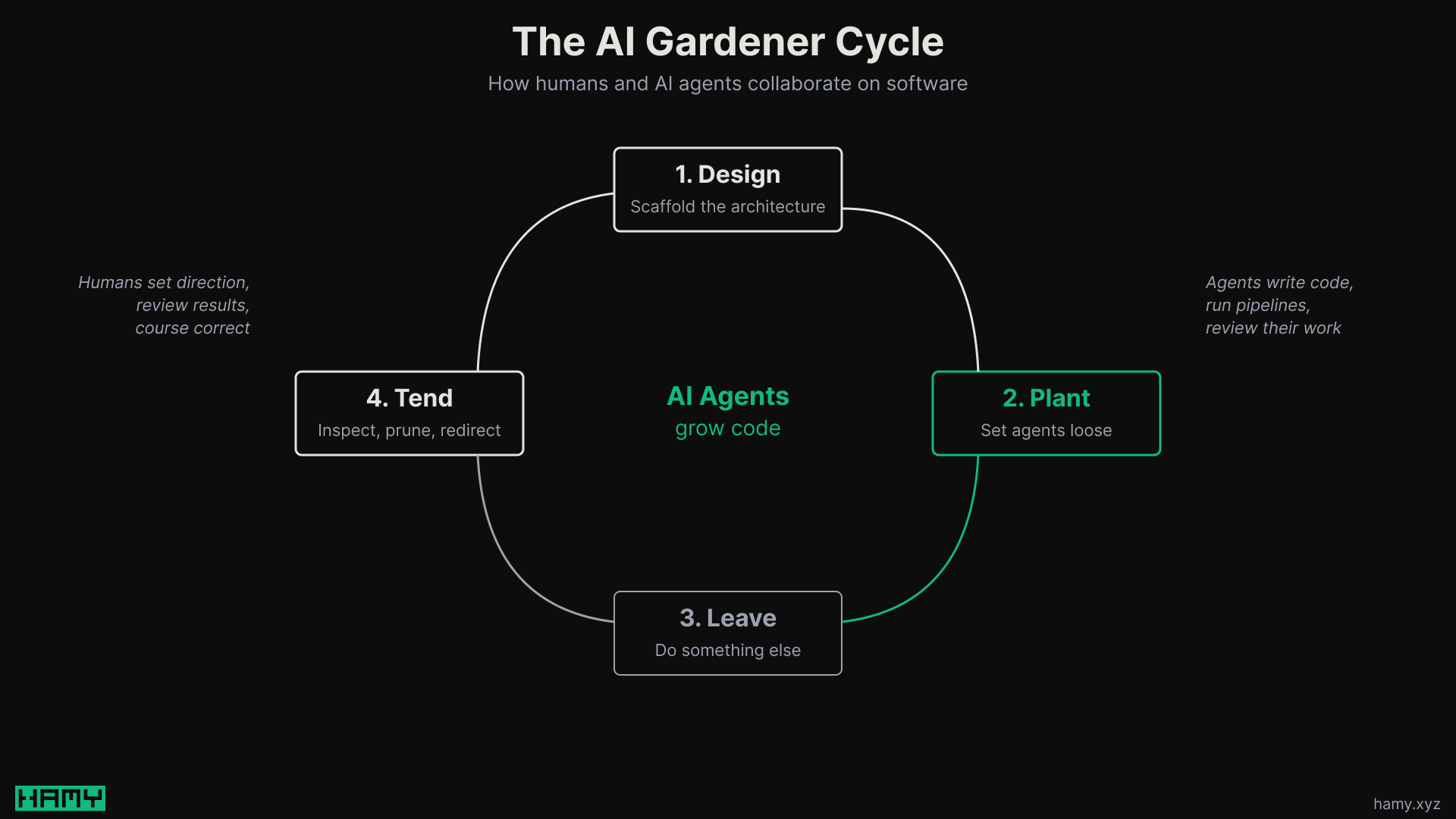

I keep coming back to the idea of a gardener for what the long-term interaction between humans and AI looks like:

- Design - lay down the scaffolding and architecture of what you want

- Plant - set the agents loose within that design

- Leave - walk away, do other things

- Tend - come back, inspect what grew, fix things, redirect, prune, plant more seeds

The gardening metaphor isn't new - Andy Hunt and Dave Thomas used it for software in The Pragmatic Programmer. Software is alive, evolving - not a building you construct once. What's new is that AI makes the unsupervised growth part real. You plant seeds (tasks) and they actually grow (code) while you're away.

Tending a garden is active work - pruning, directing, protecting. Looking at it another way, it's basically leadership. As you get more senior this is what you do - set the direction, have people work within it, redirect as necessary.

As I wrote in If AI can code, what will Software Engineers do?, the tools are changing but what you need to do to stay relevant remains the same: keep building, keep learning, keep sharing, keep experimenting.

Next

We're early into the AI era of software engineering. The tools are expensive and imperfect, but the pattern is clear - these things can code.

The orchestrator isn't replacing me completely but it is changing what I do. Less typing code, more designing systems and tending results. That feels like where the puck is going.

Want more like this?

The best way to support my work is to like / comment / share this post on your favorite socials.

Outbound Links

- 5 AI Coding Best Practices from a Google AI Director (That Actually Work)

- 9 Parallel AI Agents That Review My Code (Claude Code Setup)

- HAMINIONs - Exclusive access to project code and discounts

- What I Plan to Build at Recurse Center - A 12 Week Programmer's Retreat

- How I think about writing quality code fast with AI

- If AI can code, what will Software Engineers do?

- AI Is Here to Stay