Launching Phase Golem - An AI Orchestrator for Configurable Pipelines Backed by Git

Essay - Published: 2026.02.23 | 5 min read (1,443 words)

artificial-intelligence | build | create | phase-golem

DISCLOSURE: If you buy through affiliate links, I may earn a small commission. (disclosures)

I've been exploring AI engineering workflows for the past few months to see how I can improve the velocity and quality of my iterations. I currently use a workflow that looks a lot like a software engineering lifecycle - Task, PRD, Research, Design, Spec, Build, Review.

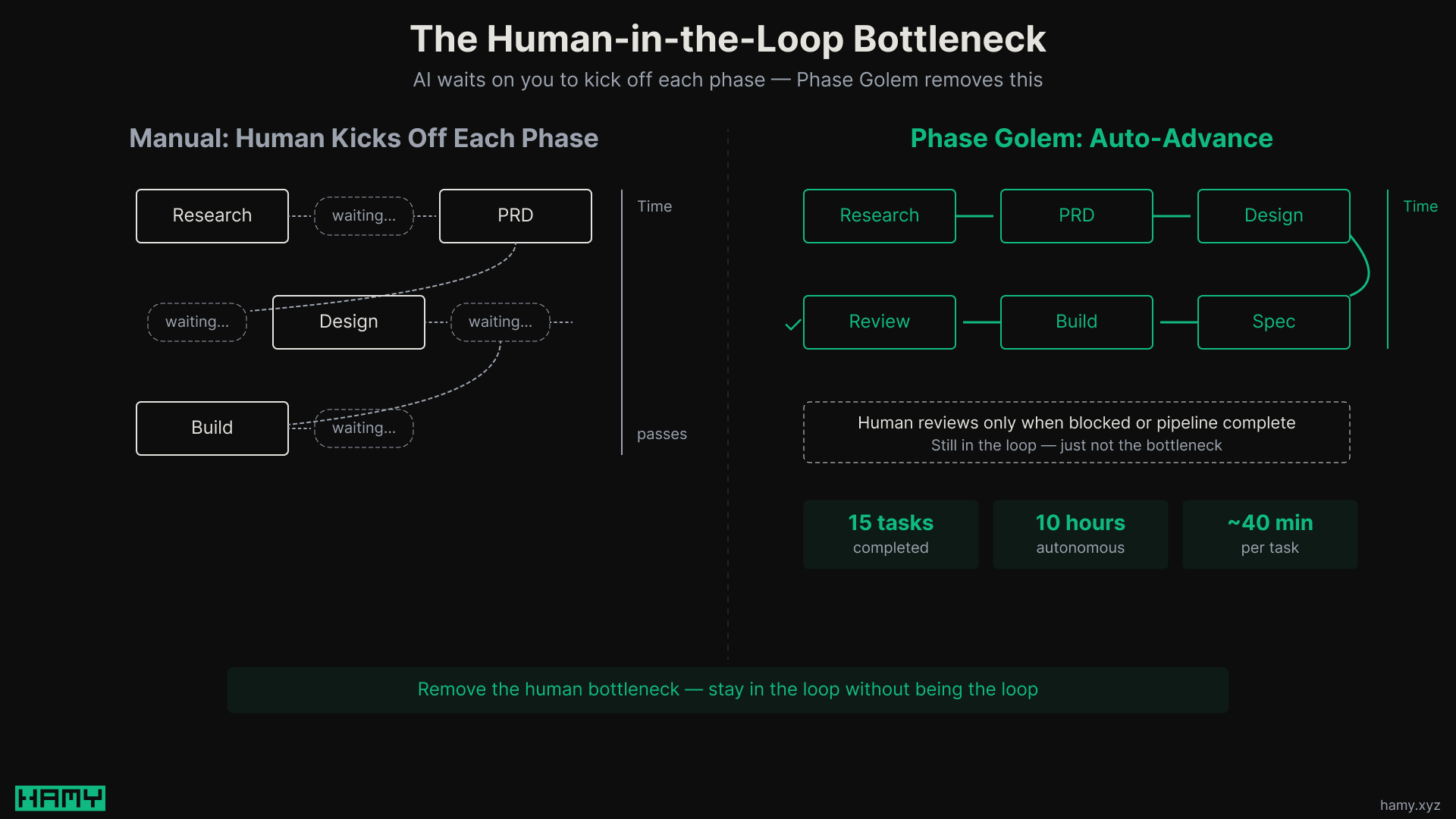

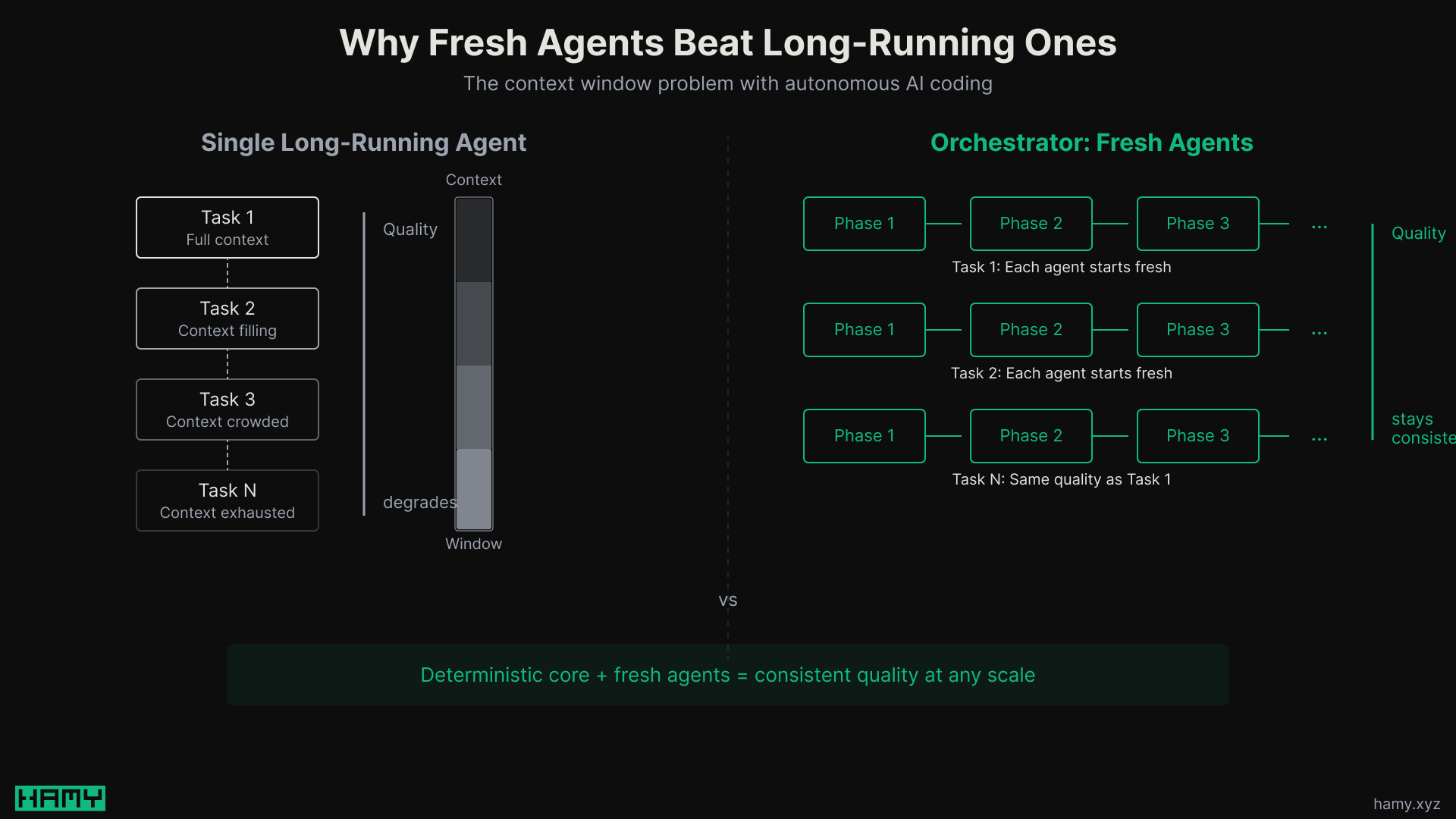

But I noticed an inefficiency - these phases require a human-in-the-loop (HITL) to kick them off one by one. This means the AI often sits idle until I come back to review or kick off the next phase, even when it doesn't need my input. I tried using an AI agent as the orchestrator, but it ran out of context (OOMed) after just a couple of hours of iteration. I also tried Ralph loops driven by an external bash script running a while loop, but that proved pretty inefficient and would frequently get stuck when a phase was blocked or a piece of work was already complete.

So I started to wonder how I could resolve this bottleneck so I could use the flows I like with AI and have them run autonomously, while still being in the loop when there are important decisions to be made.

To solve this, I built Phase Golem.

What is Phase Golem?

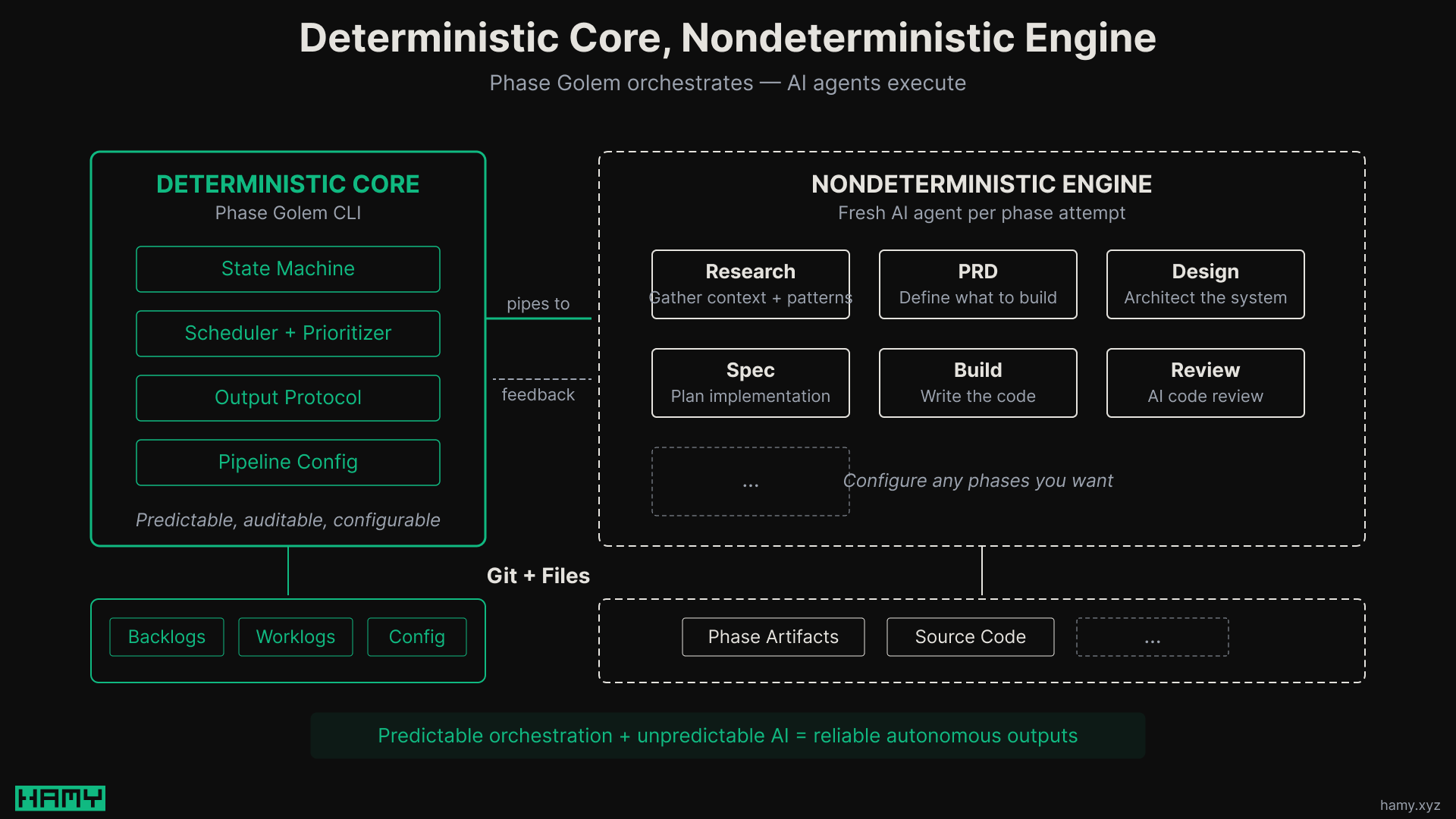

Phase Golem is an AI orchestrator that moves tasks through configurable pipelines. Each pipeline is made up of phases, and each phase pipes the context of the current work item (in files) out to a new agent that does the work.

It's called Phase Golem because it's basically a Golem (an automaton given instructions) that tends to the Phases of a pipeline. So Phase Golem. (Also, I've been really wanting to inject some more fun fantasy terms into my projects.)

Under the hood, it's basically a state machine that:

- Tracks work items and what phase each one is in

- Prioritizes the work and schedules the next work to be done based on configured inputs

- Pipes the work to the underlying AI tool with boilerplate context on where the work's files are located, what the skill / command is for this phase, and what output protocol to use to give feedback to the orchestrator

- Retries, continues, or blocks the work item or the pipeline based on the feedback from the agent
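To make the state machine idea concrete, here's a minimal sketch of the tracking, scheduling, and feedback steps above. This is not Phase Golem's actual code: every name here (`Feedback`, `Status`, `WorkItem`, `Pipeline`, `next_item`, `apply_feedback`) is hypothetical, and the "higher priority runs first" rule and the retry limit are assumptions made for illustration.

```rust
// Hypothetical sketch: none of these names come from Phase Golem's source.

/// What an agent reports back when a phase attempt ends.
#[derive(Debug, Clone, PartialEq)]
pub enum Feedback {
    /// The phase finished; continue to the next phase.
    Done,
    /// The attempt failed but is worth retrying with a fresh agent.
    Retry,
    /// A human decision is needed before this item can move on.
    Blocked(String),
}

/// Where a work item currently stands.
#[derive(Debug, Clone, PartialEq)]
pub enum Status {
    Ready,
    Blocked(String),
    Complete,
}

#[derive(Debug, Clone)]
pub struct WorkItem {
    pub id: u32,
    pub priority: u8,       // assumption: higher runs first
    pub phase_index: usize, // index into the pipeline's phase list
    pub attempts: u32,      // attempts made on the current phase
    pub status: Status,
}

pub struct Pipeline {
    pub phases: Vec<String>,
    pub max_attempts: u32, // assumption: retries are capped
}

/// Pick the highest-priority item that is ready to run.
pub fn next_item(backlog: &[WorkItem]) -> Option<&WorkItem> {
    backlog
        .iter()
        .filter(|item| item.status == Status::Ready)
        .max_by_key(|item| item.priority)
}

/// Deterministic transition: the same item and feedback always give the same result.
pub fn apply_feedback(pipeline: &Pipeline, item: &mut WorkItem, feedback: Feedback) {
    match feedback {
        Feedback::Done => {
            item.phase_index += 1;
            item.attempts = 0;
            if item.phase_index >= pipeline.phases.len() {
                item.status = Status::Complete;
            }
        }
        Feedback::Retry => {
            item.attempts += 1;
            if item.attempts >= pipeline.max_attempts {
                item.status = Status::Blocked("retry limit reached".to_string());
            }
        }
        Feedback::Blocked(reason) => item.status = Status::Blocked(reason),
    }
}
```

The point of the sketch is that the only nondeterministic input is the `Feedback` value coming back from the agent. Everything the orchestrator does with it is a plain `match`.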

The system is intentionally simple to try and provide a solid deterministic core to support AI's nondeterministic engine.

Other solutions like beads / ccswarm / gastown are far more sophisticated than Phase Golem but they also require more management. Phase Golem tries to keep things simple so that you can configure pipelines how you want them and feel good about leaving it to churn on work for hours on end. We trade off some dynamism and speed to try and gain more consistent outputs.

Things we gain from this approach:

- Auditability in the form of backlog and worklog history in git

- Deterministic configurability of pipeline stages

- No overhead from the orchestrator - it's all just your agents

- Lower agent cost as we don't need multiple agents or an overarching orchestrator to spin things up - it's just a CLI script. (Though you can spin up many subagents as part of your phase instructions if you choose - I do 9 subagents for my Review phase).

- Manage context by spinning up a new agent per attempt, similar to a Ralph loop

- Longevity? It's a glorified state machine so should remain useful even as models and AI CLIs improve.
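Here's a rough sketch of what "a new agent per attempt" could look like when the orchestrator is just a CLI. It is an illustration under assumptions, not Phase Golem's implementation: the CLI name passed in, the `--prompt` flag, the prompt wording, and the `RESULT:` line format are all made up for this example.

```rust
use std::path::Path;
use std::process::Command;

/// Boilerplate context handed to every fresh agent.
/// The wording and the `RESULT:` protocol are assumptions for illustration.
pub fn build_prompt(item_dir: &Path, phase_command: &str) -> String {
    format!(
        "Work item files: {}\nRun this phase command: {}\nWhen finished, print one final line: RESULT: done | retry | blocked",
        item_dir.display(),
        phase_command
    )
}

/// Build (but do not run) the subprocess for a single attempt.
/// `agent_cli` and `--prompt` are placeholders, not a real CLI's interface.
pub fn build_agent_command(agent_cli: &str, item_dir: &Path, phase_command: &str) -> Command {
    let mut cmd = Command::new(agent_cli);
    cmd.arg("--prompt")
        .arg(build_prompt(item_dir, phase_command))
        .current_dir(item_dir);
    cmd
}

/// Read the last `RESULT:` line the agent printed, if there is one.
pub fn parse_result(stdout: &str) -> Option<&str> {
    stdout
        .lines()
        .rev()
        .find_map(|line| line.trim().strip_prefix("RESULT:"))
        .map(str::trim)
}
```

Because each attempt builds a brand new `Command`, the agent starts with only the boilerplate prompt and whatever is in the work item's files, so no context carries over between attempts. Calling `.output()` on the returned command would run it and capture stdout for `parse_result`.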

How is Phase Golem Built?

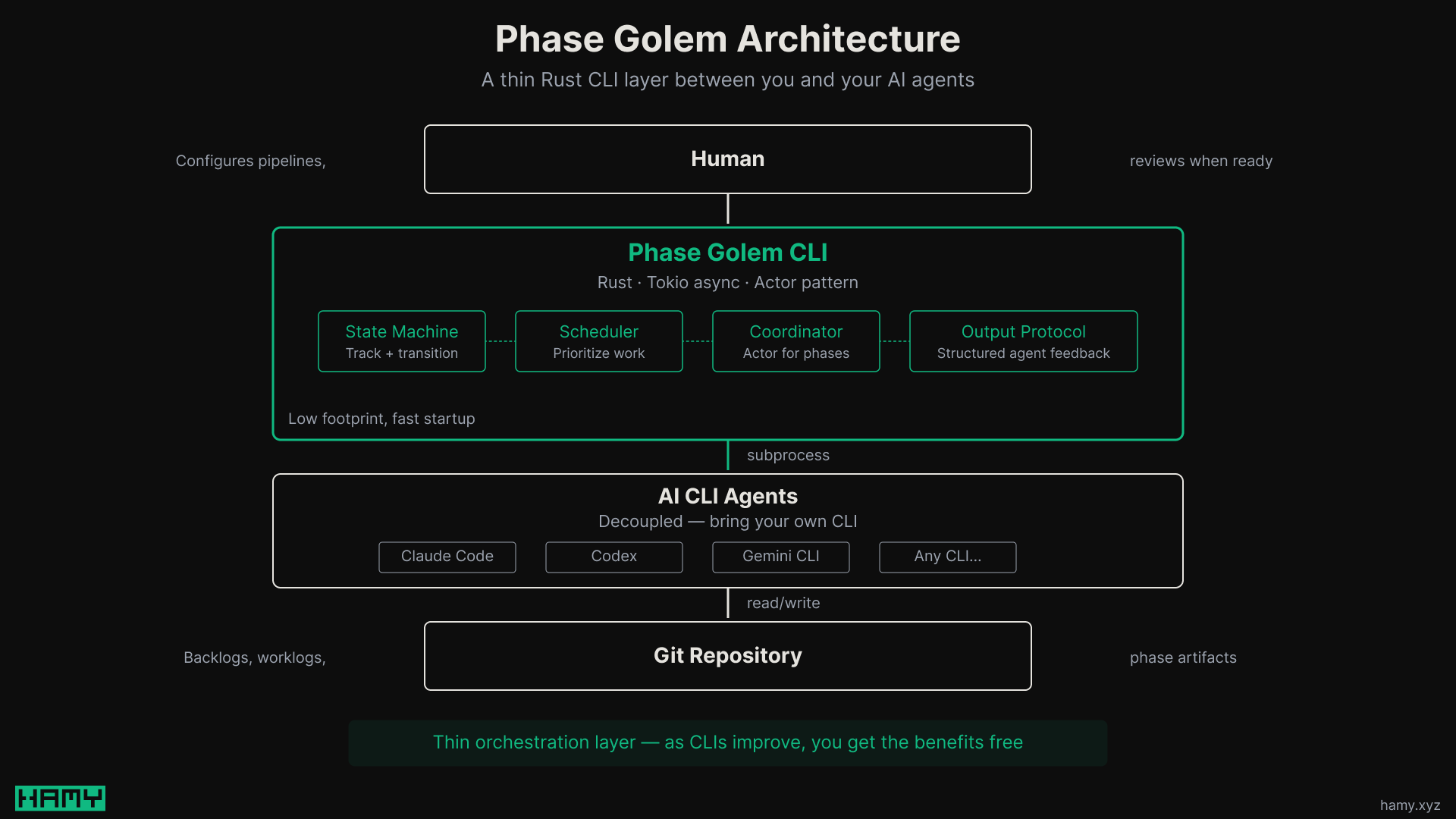

Phase Golem is a Rust CLI. I've been exploring Rust over the past several weeks of my Recurse Batch as a potential candidate for The Missing Programming Language and so far have really enjoyed writing High-Level Rust.

Rust is great for CLIs with low footprint, fast startups, and fast execution along with a solid ecosystem of libraries to make it easy. Of course the fast startup / execution doesn't really mean much as most of the time is just going to be waiting on the AI responses, but still a good tool for a CLI.

Some more design decisions:

- Actors + Async - We use tokio and an actor pattern to avoid race conditions. By default Phase Golem favors stable progress over fast but potentially unstable progress, so it limits parallel phases to those marked non-destructive and recommends low parallelism even then. Parallelism is still supported, and phases report to a coordinator actor to enqueue their changes.

- Git version control - Git is widely used and solid. It works on files and Phase Golem utilizes files to back its backlogs and phase artifacts. So we use git for version control.

- AI CLIs as sub processes - We decouple the orchestration layer from the actual phase execution. This reduces a lot of complexity, allowing the orchestrator to just be a thin layer over the existing AI CLIs. This means as the CLIs evolve and improve, we get those benefits for free. Plus it allows you to bring your favorite CLI and (eventually) configure them per phase if you wanted to swap them out.
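The coordinator actor idea from the list above can be sketched in a few lines. To keep the example dependency-free it uses standard library threads and channels instead of tokio, so treat it as an illustration of the pattern rather than how Phase Golem is written. `Report`, `run_coordinator`, and `run_parallel_phase` are hypothetical names.

```rust
use std::sync::mpsc;
use std::thread;

/// Messages that phase workers send to the coordinator (hypothetical).
pub enum Report {
    PhaseFinished { item_id: u32, phase: String },
    Shutdown,
}

/// The coordinator is the only owner of the worklog, so parallel
/// phases can never race on it. They can only send messages.
pub fn run_coordinator(inbox: mpsc::Receiver<Report>) -> Vec<String> {
    let mut worklog = Vec::new();
    for report in inbox {
        match report {
            Report::PhaseFinished { item_id, phase } => {
                worklog.push(format!("item {} finished {}", item_id, phase));
            }
            Report::Shutdown => break,
        }
    }
    worklog
}

/// Run one phase for several items in parallel and return the worklog.
pub fn run_parallel_phase(item_ids: &[u32], phase: &str) -> Vec<String> {
    let (tx, rx) = mpsc::channel();
    let coordinator = thread::spawn(move || run_coordinator(rx));

    let workers: Vec<_> = item_ids
        .iter()
        .map(|&item_id| {
            let tx = tx.clone();
            let phase = phase.to_string();
            thread::spawn(move || {
                // A real worker would run the agent subprocess here.
                tx.send(Report::PhaseFinished { item_id, phase }).unwrap();
            })
        })
        .collect();

    // Wait for every worker, then tell the coordinator to stop.
    for worker in workers {
        worker.join().unwrap();
    }
    tx.send(Report::Shutdown).unwrap();
    coordinator.join().unwrap()
}
```

Workers may finish in any order, so the order of worklog entries is not guaranteed. What the pattern does guarantee is that all state changes flow through one place.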

The code itself was largely vibe engineered. I provided direction in the form of product requirements, research, and design and left a good bit of the implementation to AI. I also ran Phase Golem on itself to help me work through a lot of the cleanup tasks that came up as we built.

How does it perform? How should you use it?

It performs decently! Surprisingly well, if I'm honest. A lot of this is just that the models have gotten good and I've limited the size / complexity of the tasks I let it take on.

From my recent 10 hour run of it:

- Finished 15 tasks (~1 / 40 mins)

- Cost $90 in tokens (~$6 / task)

- All tasks were small/medium in size with low/medium complexity

So not the fastest or cheapest thing in the world, but the change sets were reasonable and it finished a lot of nice-to-have work I would've had to do myself. Also note that there's a lot of room for cost optimization. This run used Claude Opus 4.6, which is an expensive model. Switching to something like GLM-5 or even Codex would likely reduce that cost drastically.

My current perspective on when tools like this are useful is:

- Set it up with a backlog of tasks that are nice to haves - so you have work scheduled for it to do

- Let it run on small-medium tasks with low-medium risk and complexity - so you can feel pretty confident it can few-shot things and if it messes up there's limited blast radius

- Run it when you step away from the project and want a few tasks done

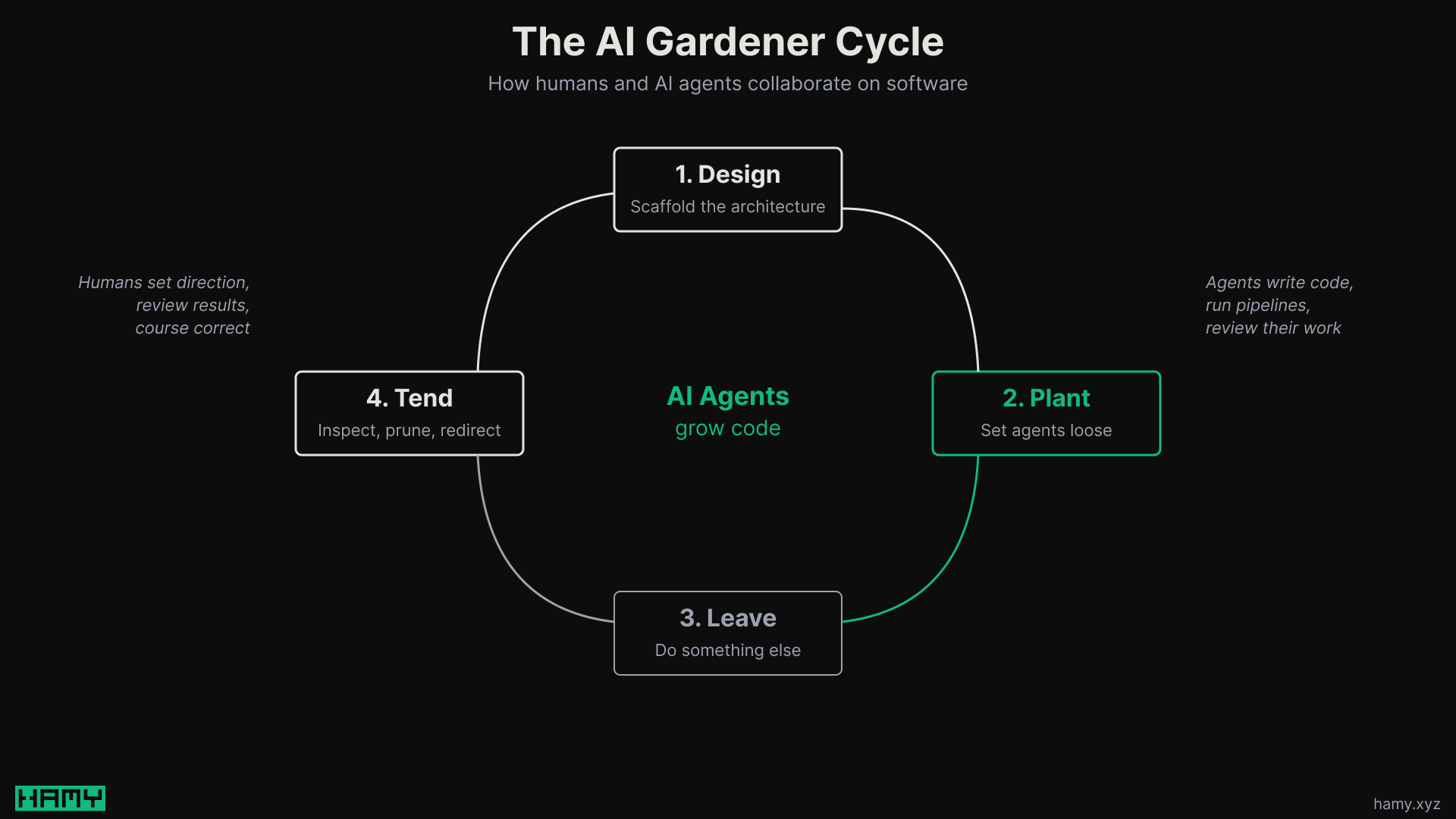

My mental model for how to use it is kind of like a gardener:

- You plant seeds of work

- AI tends / grows those pieces of work through their pipelines

- You come back when you're ready / want to check in on it and review the changes

- Everything is git committed and in files so reverting is easy and we have worklogs to see exactly what's been done and why

Next

Take a look at Phase Golem if you're interested in a simple, local-first AI orchestrator. It's not going to win any awards for speed or capability but I do think it's got a novel and refreshing approach to pipeline configuration. With some tweaks, I think you can get some really good outputs out of it.

I'll be making improvements to this as I run into things personally. If you have feedback, feel free to share it, though I expect this to be more of a personal tool I've shared publicly than anything built for mass adoption, so I'll prioritize things I personally find useful.

If you want to see the workflows I use for vibe engineering, you can find a snapshot of my .claude folders in the HAMY LABS Example Repo. This repo is available to all HAMINIONs Members and contains dozens of example projects I talk about here on the blog as well as regular snapshots of my AI dotfiles.

Outbound Links

- How I think about writing quality code fast with AI

- I Built an AI Orchestrator and Ran It Overnight - Here's What Happened

- 9 Parallel AI Agents That Review My Code (Claude Code Setup)

- What I Built in my First 6 Weeks at Recurse Center and What's Next (Early Return Statement)

- The Missing Programming Language - Why There's No S-Tier Language (Yet)

- High-Level Rust: Getting 80% of the Benefits with 20% of the Pain

- HAMINIONs - Exclusive access to project code and discounts