Benchmarking my Markdown Blog in Rust and C# - 4.6x Less Memory, 2-8x Faster Latency on the Same App

Essay - Published: 2026.02.27 | 6 min read (1,519 words)

DISCLOSURE: If you buy through affiliate links, I may earn a small commission. (disclosures)

I recently rewrote my blog from C# to Rust as a way to further explore High-Level Rust. Both versions serve the same 1,025+ posts from memory using the same architecture: parse all posts at startup, build indices, serve everything from memory. So I thought it was a good opportunity to benchmark them side-by-side.

The result: Rust uses 4.6x less memory and is 2-8x faster on p50 latency across seven endpoints at three concurrency levels for essentially the exact same logic.

Caveat: all benchmarks have asterisks. We'll go through the methodology and limitations so you can judge the results for yourself.

Test Setup

Most Rust vs C# benchmarks compare synthetic hello-world endpoints. This one compares the same real-world application - my markdown blog - running on both stacks with equivalent content and architecture.

- Rust stack: axum 0.7, Maud templates, rayon for startup parsing

- C# stack: ASP.NET Core, CinderBlockHtml (HTML DSL), same in-memory approach

- Endpoints tested: `/`, `/about`, `/blog`, `/blog/2025-reflection`, `/tags/finance`, `/tags`, `/stats`

- Benchmark tool: custom Rust CLI wrapping hey, measuring full response time through body consumption

- Environment: Docker containers on the same machine (mirrors production), memory measured via `docker stats` with 10s post-startup baseline

- Load levels: light (5K requests, 10 concurrent), medium (10K, 50 concurrent), heavy (20K, 100 concurrent)
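
To make the shared architecture concrete, here is a minimal std-only sketch of "parse at startup, build indices, serve from memory". The `Post` and `Site` types and their fields are hypothetical (the blog's real types aren't shown in this post), and the sketch builds its indices sequentially rather than with rayon.

```rust
use std::collections::HashMap;

/// Hypothetical post type; the real blog's fields are an assumption here.
#[derive(Debug, Clone)]
pub struct Post {
    pub slug: String,
    pub title: String,
    pub tags: Vec<String>,
    pub html: String, // rendered once at startup, reused on every request
}

/// Everything a request handler needs, built once and then only read.
pub struct Site {
    pub posts: Vec<Post>,
    by_slug: HashMap<String, usize>,     // slug -> index into `posts`
    by_tag: HashMap<String, Vec<usize>>, // tag  -> indices into `posts`
}

impl Site {
    /// Builds the lookup indices in a single pass over the parsed posts.
    pub fn build(posts: Vec<Post>) -> Self {
        let mut by_slug = HashMap::new();
        let mut by_tag: HashMap<String, Vec<usize>> = HashMap::new();
        for (i, post) in posts.iter().enumerate() {
            by_slug.insert(post.slug.clone(), i);
            for tag in &post.tags {
                by_tag.entry(tag.clone()).or_default().push(i);
            }
        }
        Site { posts, by_slug, by_tag }
    }

    /// Lookup for a single post page such as /blog/<slug>.
    pub fn post(&self, slug: &str) -> Option<&Post> {
        self.by_slug.get(slug).map(|&i| &self.posts[i])
    }

    /// Lookup for a tag page such as /tags/<tag>; empty if the tag is unknown.
    pub fn tagged(&self, tag: &str) -> Vec<&Post> {
        self.by_tag
            .get(tag)
            .map(|idxs| idxs.iter().map(|&i| &self.posts[i]).collect())
            .unwrap_or_default()
    }
}
```

With this shape, a request never touches the filesystem or re-parses markdown; it is a hash lookup followed by template rendering.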

Known limitations:

- Single machine — server and bench tool compete for resources

- Limited warmup for C# (5 requests) — JIT benefits from more, though p50 across 5K+ requests amortizes this

- `docker stats` includes kernel overhead, which inflates both numbers slightly, and its sampling is imprecise

- Single runs of each benchmark: no statistical averaging across multiple runs
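
Since every latency number below is a p50, here is a rough sketch of how a percentile can be derived from raw samples using the nearest-rank method. This is an assumption for illustration: the bench tool and hey may compute percentiles differently, and `percentile` is a hypothetical helper, not part of either.

```rust
use std::time::Duration;

/// Nearest-rank percentile over latency samples. `p` must be in (0, 100].
/// Returns None for an empty slice or an out-of-range `p`.
pub fn percentile(samples: &[Duration], p: f64) -> Option<Duration> {
    if samples.is_empty() || !(p > 0.0 && p <= 100.0) {
        return None;
    }
    let mut sorted = samples.to_vec();
    sorted.sort();
    // Nearest rank: the smallest sample with at least p% of samples at or below it.
    let rank = ((p / 100.0) * sorted.len() as f64).ceil() as usize;
    Some(sorted[rank.max(1) - 1])
}
```

The p50 is the median, so half of the requests finished at or below that time. A handful of slow requests moves the upper percentiles long before it moves the p50.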

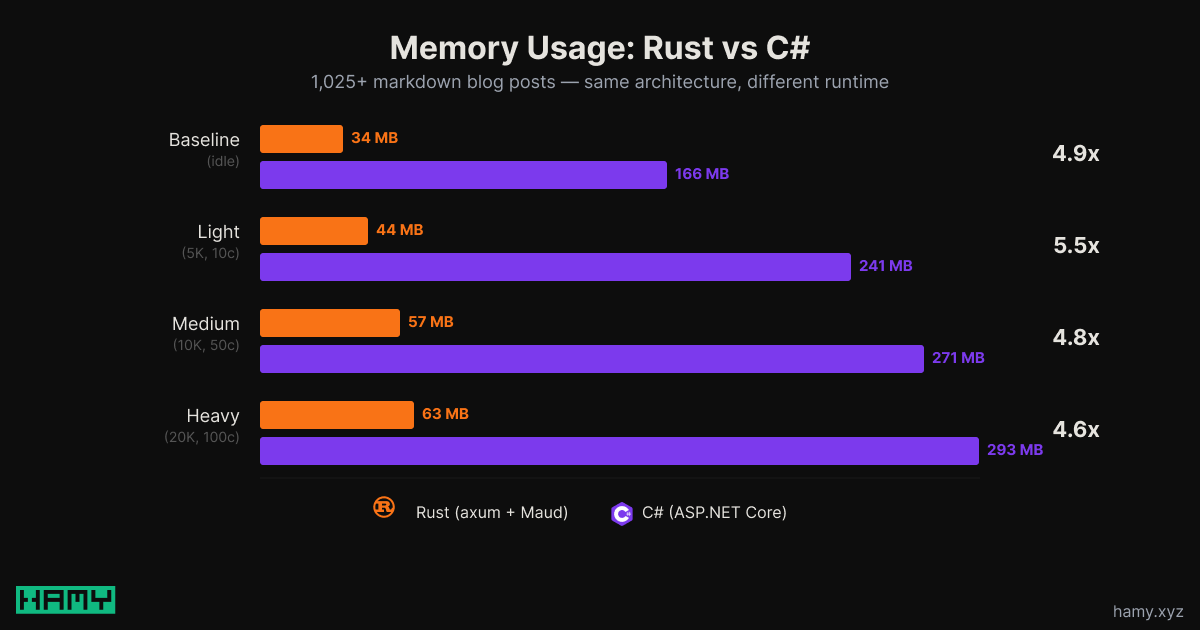

Memory: 4.6x Less in Rust

| | Rust | C# | Ratio |
|---|---|---|---|
| Baseline | 34 MB | 166 MB | 4.9x |
| Light load (5K, 10c) | 44 MB | 241 MB | 5.5x |
| Medium load (10K, 50c) | 57 MB | 271 MB | 4.8x |
| Heavy load (20K, 100c) | 63 MB | 293 MB | 4.6x |

At baseline, Rust with all 1,025+ posts loaded in memory uses about a fifth of the RAM of the C# version with the same posts loaded (34 MB vs 166 MB).

Why C# uses more: .NET has significant runtime overhead from its garbage collector, JIT compiler, and type system metadata. Microsoft's docs show ~50MB for a Hello World ASP.NET Core app, so our 166MB baseline with 1,025 posts loaded is reasonable.

Why Rust's memory grows under load: This is heap fragmentation, not a memory leak. It's well-documented in the tokio ecosystem — it's the same "stair-step" pattern Svix saw in production. I verified by running the heavy load benchmark 3x back-to-back without restarting: 63MB, 71MB, 67MB. The plateau signals no leak (we'd expect ~linear growth with a leak).
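
One rough way to put a number on "plateau vs leak" is to fit a line through the readings and look at the slope. The sketch below does that with a plain least-squares fit. `slope_mb_per_run` is a hypothetical helper, and three readings are far too few for any statistical claim, so treat it as a sanity check rather than proof.

```rust
/// Least-squares slope of memory readings taken after consecutive runs,
/// in MB per run. Returns None with fewer than two readings.
pub fn slope_mb_per_run(readings_mb: &[f64]) -> Option<f64> {
    let n = readings_mb.len();
    if n < 2 {
        return None;
    }
    // Runs are indexed 0, 1, 2, ... so the mean index is (n - 1) / 2.
    let mean_x = (n as f64 - 1.0) / 2.0;
    let mean_y = readings_mb.iter().sum::<f64>() / n as f64;
    let mut num = 0.0;
    let mut den = 0.0;
    for (i, &y) in readings_mb.iter().enumerate() {
        let dx = i as f64 - mean_x;
        num += dx * (y - mean_y);
        den += dx * dx;
    }
    Some(num / den)
}
```

For the readings above (63, 71, 67) the slope is 2 MB per run. A made-up leaking series that kept adding the 29 MB gap between baseline and heavy load on every run (63, 92, 121) would give 29 MB per run.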

Latency: 2-8x Faster in Rust

Here are the results under heavy load (20K requests, 100 concurrent):

| Endpoint | Rust P50 | C# P50 | Speedup | Rust Req/s | C# Req/s | Req/s Speedup |
|---|---|---|---|---|---|---|
| `/` | 444µs | 2.17ms | 4.9x | 1,283 | 388 | 3.3x |
| `/about` | 406µs | 1.65ms | 4.1x | 2,058 | 575 | 3.6x |
| `/blog` | 811µs | 6.83ms | 8.4x | 1,063 | 139 | 7.7x |
| `/blog/slug` | 599µs | 2.65ms | 4.4x | 1,411 | 361 | 3.9x |
| `/tags/finance` | 508µs | 1.04ms | 2.0x | 1,690 | 445 | 3.8x |
| `/tags` | 1.73ms | 10.84ms | 6.3x | 535 | 82 | 6.5x |
| `/stats` | 533µs | 1.83ms | 3.4x | 1,601 | 498 | 3.2x |

A few patterns stand out:

Heavier pages show bigger gaps. /blog (listing all posts) hits 8.4x, /tags (listing all tags with post counts) hits 6.3x. These pages render the most HTML, so the advantage of Maud's compile-time template generation compounds.

Simple pages still show a clear gap. Even /about and individual blog posts, which are relatively lightweight pages, are about 4x faster under heavy load (4.1x and 4.4x).

The gap widens with concurrency. /blog goes from 4.1x at 10 concurrent to 5.6x at 50 to 8.4x at 100. This is consistent with GC pressure - as concurrency increases, the garbage collector has more work to do, and the occasional GC pause shows up more in p50 numbers. Rust has no garbage collector, and tokio tasks only yield at `.await` points, so there are no stop-the-world GC pauses (though a task that blocks without yielding can still starve the runtime, and deadlocks are still possible and arguably more likely in a language like Rust).
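
For reference, the speedup figures are just the ratio of the two p50 values, rounded to one decimal place. A small sketch using the /blog numbers from the tables (`speedup` and `round1` are hypothetical helper names):

```rust
use std::time::Duration;

/// How many times faster `fast` is than `slow`, e.g. 4.1 for "4.1x".
pub fn speedup(slow: Duration, fast: Duration) -> f64 {
    slow.as_secs_f64() / fast.as_secs_f64()
}

/// Rounds to one decimal place, matching the precision used in the tables.
pub fn round1(x: f64) -> f64 {
    (x * 10.0).round() / 10.0
}

/// /blog speedups at 10, 50 and 100 concurrent, from the p50 values in this post.
pub fn blog_speedups() -> [f64; 3] {
    [
        round1(speedup(Duration::from_micros(906), Duration::from_micros(222))),
        round1(speedup(Duration::from_micros(3_030), Duration::from_micros(541))),
        round1(speedup(Duration::from_micros(6_830), Duration::from_micros(811))),
    ]
}
```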

Results at Other Load Levels

For completeness, here are the light and medium load results:

Light load (5K requests, 10 concurrent):

| Endpoint | Rust P50 | C# P50 | Speedup |
|---|---|---|---|
| `/` | 101µs | 345µs | 3.4x |
| `/about` | 118µs | 245µs | 2.1x |
| `/blog` | 222µs | 906µs | 4.1x |
| `/blog/slug` | 160µs | 361µs | 2.3x |
| `/tags/finance` | 144µs | 346µs | 2.4x |
| `/tags` | 475µs | 2.35ms | 4.9x |

Medium load (10K requests, 50 concurrent):

| Endpoint | Rust P50 | C# P50 | Speedup |
|---|---|---|---|
| `/` | 237µs | 1.48ms | 6.2x |
| `/about` | 287µs | 815µs | 2.8x |
| `/blog` | 541µs | 3.03ms | 5.6x |
| `/blog/slug` | 364µs | 1.27ms | 3.5x |
| `/tags/finance` | 344µs | 1.18ms | 3.4x |
| `/tags` | 1.09ms | 9.14ms | 8.4x |

The Outlier: /stats Was 0.9x (C# Won)

In the initial benchmark, /stats was the one endpoint where C# was slightly faster — 0.9x. Here were the initial heavy load results:

| Endpoint | Rust P50 | C# P50 | Speedup |
|---|---|---|---|
| `/stats` | 2.07ms | 1.83ms | 0.9x (C# wins) |

The root cause wasn't a language difference but an implementation gap. The C# version pre-computed all stats at startup (post counts, word totals, yearly groupings) and only rendered the HTML on each request. The Rust version was recomputing everything per request: 4 full scans of all 1,025 posts, HashMap grouping by year, and tag counting with visibility filtering.

After fixing the Rust version to pre-compute stats at startup (matching the C# approach):

| Metric | Before Fix | After Fix |
|---|---|---|
| Rust P50 | 2.07ms | 533µs (3.9x faster) |
| vs C# P50 | 0.9x (C# faster) | 3.4x (Rust faster) |
| Rust Req/s | 453 | 1,601 (3.5x more throughput) |

So the 0.9x outlier was a missing optimization that the C# version already had. Once the implementations matched, /stats showed a 3.4x Rust advantage, in line with the other lightweight endpoints.
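
The shape of that fix, as a minimal std-only sketch: compute the aggregates once when the app state is built, and have the handler only format them. The `PostMeta`, `Stats`, and `AppState` types and their fields are hypothetical, and filtering everything by `visible` is an assumption about how visibility works, not the blog's actual code.

```rust
use std::collections::BTreeMap;

/// Hypothetical per-post metadata; field names are assumptions.
pub struct PostMeta {
    pub year: u16,
    pub word_count: usize,
    pub tags: Vec<String>,
    pub visible: bool,
}

/// Aggregates the stats page needs, computed once.
#[derive(Debug, Default, Clone, PartialEq)]
pub struct Stats {
    pub post_count: usize,
    pub total_words: usize,
    pub posts_per_year: BTreeMap<u16, usize>,
    pub posts_per_tag: BTreeMap<String, usize>,
}

impl Stats {
    /// Single pass over visible posts. Meant to run at startup, not per request.
    pub fn compute(posts: &[PostMeta]) -> Self {
        let mut stats = Stats::default();
        for post in posts.iter().filter(|p| p.visible) {
            stats.post_count += 1;
            stats.total_words += post.word_count;
            *stats.posts_per_year.entry(post.year).or_insert(0) += 1;
            for tag in &post.tags {
                *stats.posts_per_tag.entry(tag.clone()).or_insert(0) += 1;
            }
        }
        stats
    }
}

/// Shared, read-only state handed to request handlers.
pub struct AppState {
    pub posts: Vec<PostMeta>,
    pub stats: Stats,
}

impl AppState {
    pub fn new(posts: Vec<PostMeta>) -> Self {
        let stats = Stats::compute(&posts); // pay the scan cost once
        AppState { posts, stats }
    }
}

/// Per-request work is now just formatting the cached numbers.
pub fn render_stats(state: &AppState) -> String {
    format!(
        "{} posts, {} words, {} years, {} tags",
        state.stats.post_count,
        state.stats.total_words,
        state.stats.posts_per_year.len(),
        state.stats.posts_per_tag.len()
    )
}
```

Because the posts never change after startup, the cached `Stats` can't go stale, which is what makes this safe without any invalidation logic.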

Does Any of This Matter?

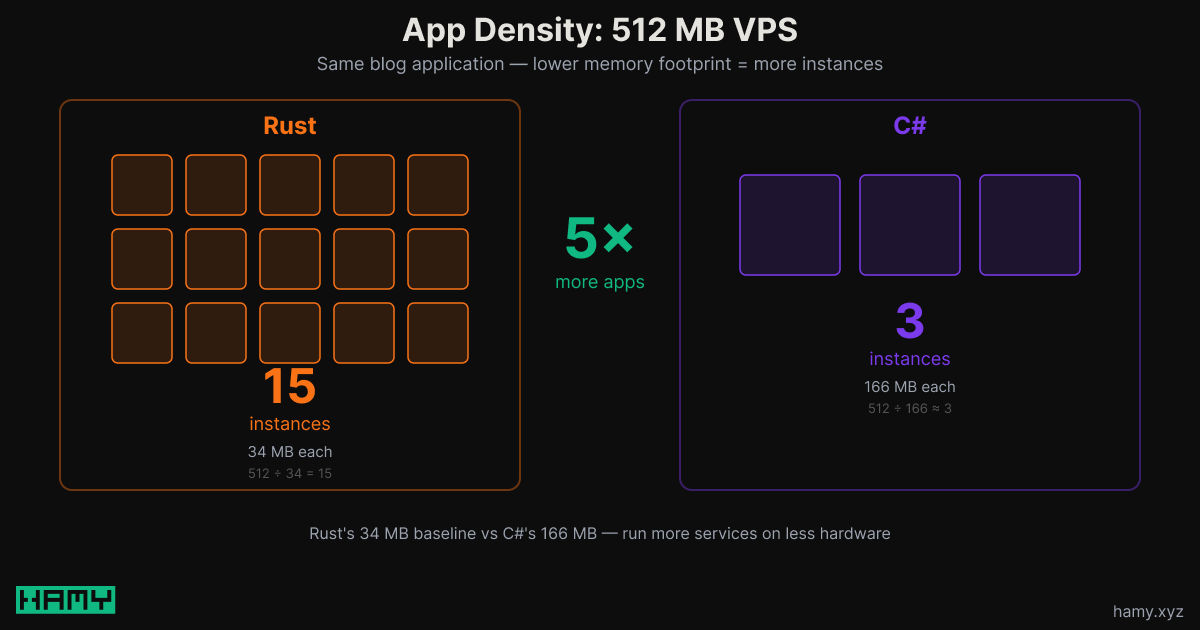

For a personal blog? Not really. Sub-millisecond vs low-millisecond latency is imperceptible to users. Nobody is hitting my blog with 100 concurrent requests and the network latency from my Hetzner box in Finland to US East (~100ms) drowns out any gains from faster rendering.

Where it does matter:

- Hosting costs. 34MB vs 166MB baseline means running more apps comfortably on smaller instances.

- Tail latency at scale. The p99 gap is larger than the p50 gap shown here because of GC pauses, so at scale this can have a bigger impact on end-to-end latency.

- Container density. In Kubernetes, benchmarks show Rust at ~9MB vs C# at ~200MB, which is roughly 22x more containers per node on memory alone.
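
The density arithmetic is simple enough to sketch. The 4096 MB node budget below is a made-up figure for illustration, and the calculation ignores CPU, per-pod overhead, and headroom, so real numbers would be lower.

```rust
/// Containers that fit in a node's memory budget, by memory alone.
/// Returns None if the per-container footprint is zero.
pub fn containers_per_node(node_mb: u64, per_container_mb: u64) -> Option<u64> {
    node_mb.checked_div(per_container_mb)
}

/// How many times more containers of the smaller footprint fit
/// in the same memory budget.
pub fn density_ratio(small_mb: f64, large_mb: f64) -> f64 {
    large_mb / small_mb
}
```

With the ~9MB and ~200MB figures, a 4096 MB budget fits 455 vs 20 containers. With this post's own baselines (34 MB vs 166 MB) it fits 120 vs 24, which is closer to 5x.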

A few counter-arguments worth acknowledging:

- .NET is getting better. Features like DATAS for server GC and Native AOT reduce memory footprint, and each yearly release brings sizable performance improvements, so I'd expect the memory and latency gap to shrink in the coming years.

- Developer productivity. C# is still faster to write for most teams as Rust's borrow checker has an infamous learning curve. Techniques like High-Level Rust and AI can help but don't fully eliminate it.

Next

Rust is 2-8x faster and uses 4.6x less memory than C# for running my markdown blog. The advantage is largest on heavy pages and under high concurrency.

But performance isn't the only reason to choose a language. I wrote about why I use high-level Rust — the performance is a bonus on top of the type system and ownership model. And with agentic AI engineering, the productivity gap is narrowing.

If you're considering Rust for a web project, the performance story is real and I personally think the learning curve can be vastly improved with the right approach. I'm building CloudSeed - a fullstack Rust boilerplate - using this paradigm to help improve devx and ship speed. You can get it now for an early access discount for a limited time.

Outbound Links

- High-Level Rust: Getting 80% of the Benefits with 20% of the Pain

- Your Programming Language Benchmark is Wrong

- How I self-host with Ansible - Multi-server Container Deployments with Nomad

- The Missing Programming Language - Why There's No S-Tier Language (Yet)

- How I think about writing quality code fast with AI

- The Problem with Clones in Rust - Why Functional Rust is Slower Than You Think (And How to Fix It)