How One Million Checkboxes was Built (Tech Stack and System Design)

Essay - Published: 2025.02.05 | 3 min read (921 words)

One Million Checkboxes (OMCB) was a website where anyone on the internet could check or uncheck boxes in one shared, global grid. It was a hit for the few weeks it was alive and inspired me to create my own version with HTMX.

OMCB's creator Nolen Royalty (aka eieio) recently gave a talk explaining how and why he built it. Naturally I was curious and wanted to compare notes, so in this post I'll summarize how OMCB worked - its tech stack, its system design, and what we can learn from it.

How One Million Checkboxes Works

At a high level:

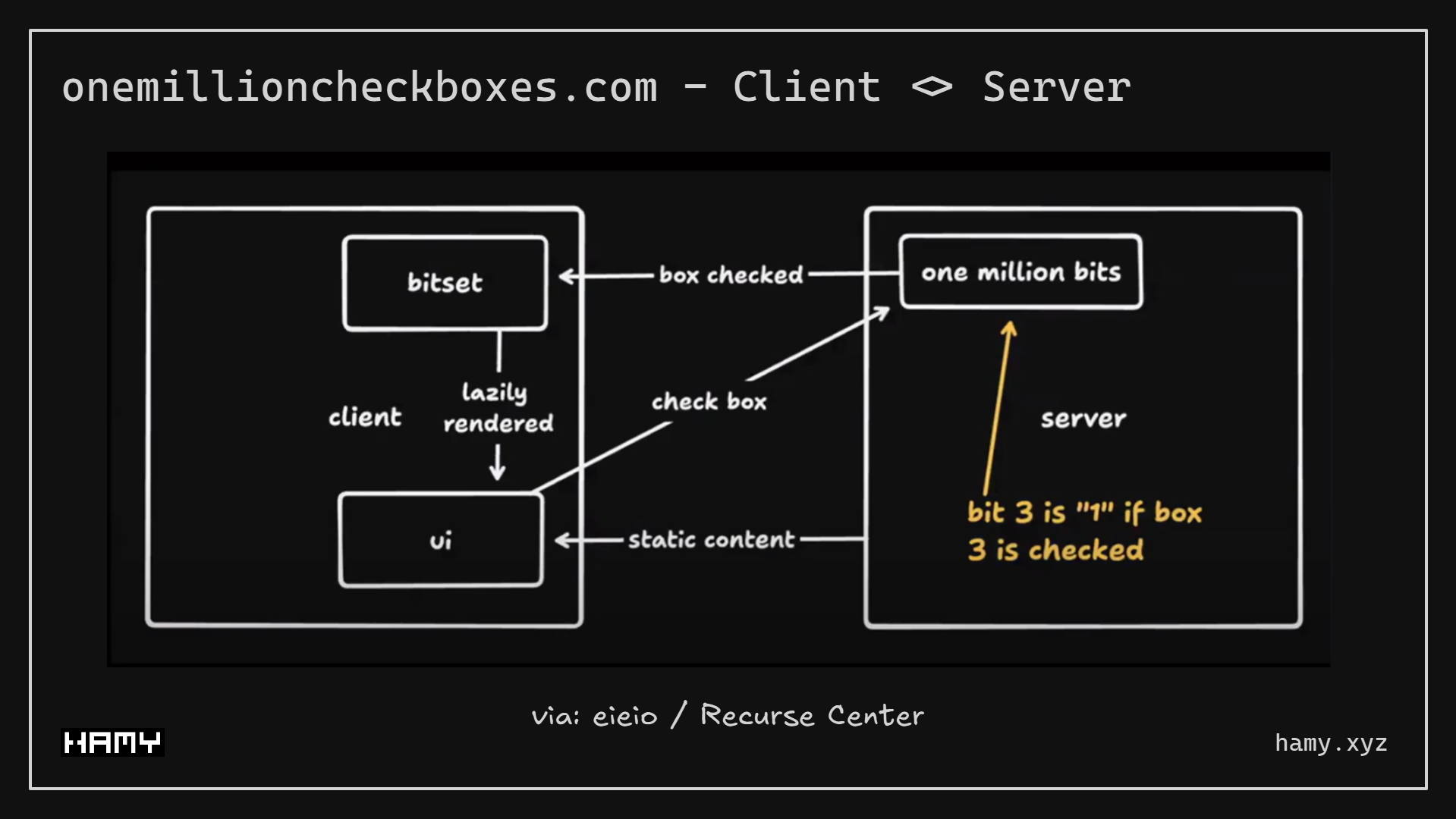

- A user comes to the website and a websocket is opened between client and server

  - The client gets the full state of ALL checkboxes in a form similar to [[CHECKED], [UNCHECKED]], where CHECKED / UNCHECKED are the indices of those boxes

  - As boxes are checked / unchecked, it receives additional state updates (sometimes deltas, sometimes a full refresh) in that same form

- A user checks a box, this is sent to the server via websocket

- Change is batched to Redis

- Redis emits a delta

- Servers get delta and push it to attached clients
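To make the update format concrete, here is a minimal sketch of how a client could fold those `[[CHECKED], [UNCHECKED]]` messages into its local state. This is an illustration only, not OMCB's actual client code: the function name `apply_update`, the `full` flag, and the use of a Python set are all assumptions made for the example.

```python
from typing import List, Set


def apply_update(checked: Set[int], update: List[List[int]], full: bool = False) -> Set[int]:
    """Return the new set of checked box indices after one server message.

    `update` has the shape [[checked indices], [unchecked indices]].
    `full` is a hypothetical flag marking a full refresh rather than a delta.
    """
    newly_checked, newly_unchecked = update
    if full:
        # A full refresh replaces whatever the client believed before.
        return set(newly_checked)
    # A delta only touches the boxes it mentions.
    return (checked | set(newly_checked)) - set(newly_unchecked)


state = apply_update(set(), [[1, 5, 9], []], full=True)  # initial full state
state = apply_update(state, [[2], [5]])                  # later delta
print(sorted(state))  # [1, 2, 9]
```

The occasional full refresh acts as a safety net: if a delta is dropped, the next full state brings the client back in sync.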

While the client only renders the checkboxes visible in the browser window, it still receives ALL updates from the server. The creator says this is to make it feel more "alive" - if you scroll very quickly there is no loading step, the boxes are already there and changing.
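The idea of rendering only what is on screen can be sketched as a small index calculation. This is not React Window's API (the real frontend was React), just the underlying arithmetic in Python; the pixel sizes, `boxes_per_row`, and the `overscan_rows` buffer are made-up values for illustration.

```python
from typing import Tuple


def visible_range(scroll_top_px: int, viewport_height_px: int, box_size_px: int,
                  boxes_per_row: int, total_boxes: int,
                  overscan_rows: int = 2) -> Tuple[int, int]:
    """Return the half-open index range [start, end) of boxes worth rendering."""
    # First and last grid rows that intersect the viewport, plus a small buffer.
    first_row = max(0, scroll_top_px // box_size_px - overscan_rows)
    last_row = (scroll_top_px + viewport_height_px - 1) // box_size_px + overscan_rows
    start = min(total_boxes, first_row * boxes_per_row)
    end = min(total_boxes, (last_row + 1) * boxes_per_row)
    return start, end


print(visible_range(0, 900, 30, 40, 1_000_000))     # (0, 1280)
print(visible_range(3000, 900, 30, 40, 1_000_000))  # (3920, 5280)
```

With these assumed numbers only about 1,300 of the million boxes are ever in the DOM at once, even though the full state is held in memory.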

One Million Checkboxes - Tech Stack

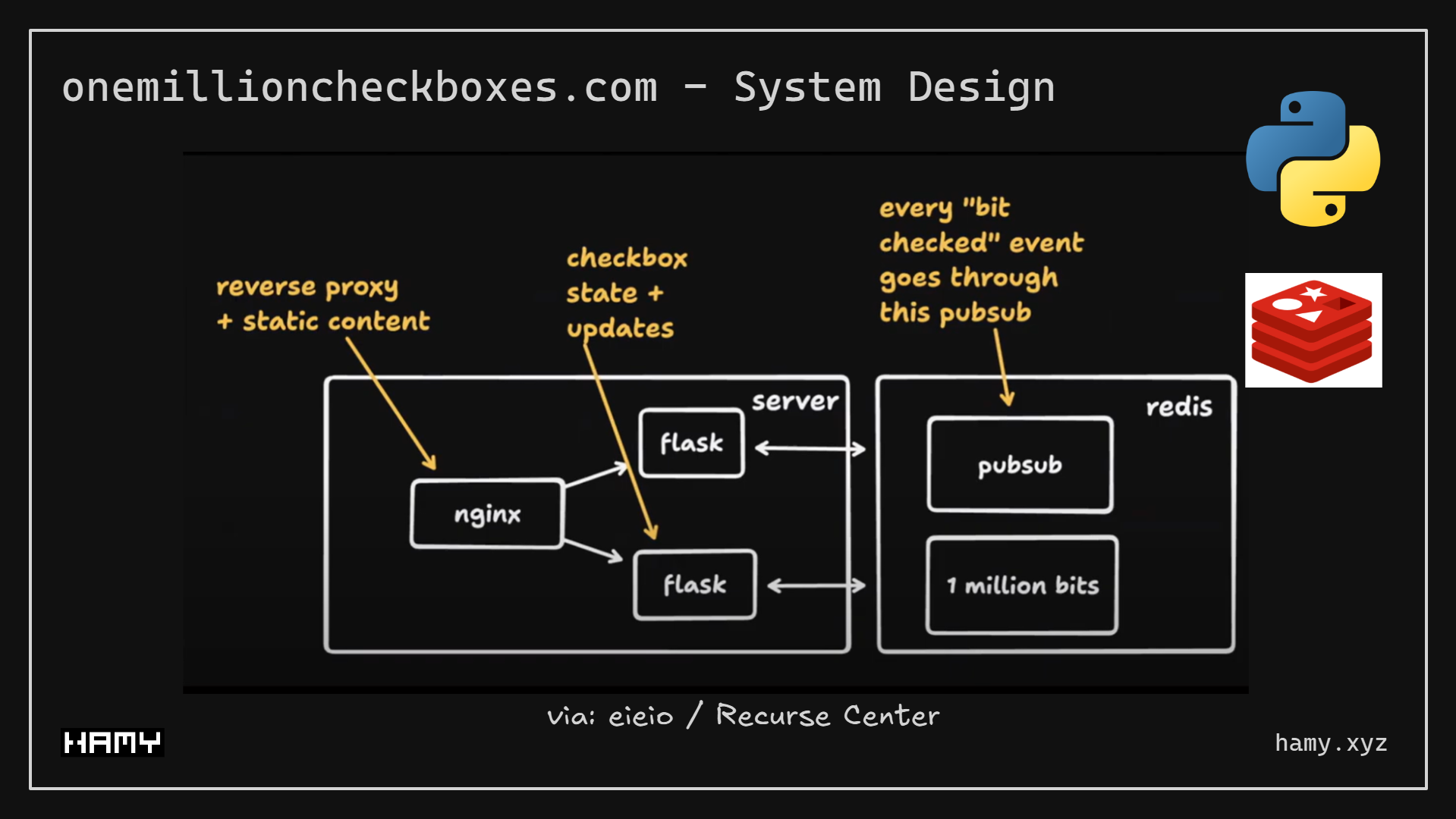

OMCB was hosted on Digital Ocean droplets with a managed DO Redis instance.

- Frontend: React with React Window for selectively rendering viewport

- Backend: Python Flask, horizontally scaled with Nginx as router / load balancer

- Database: Redis, managed by Digital Ocean

- Hosting: roughly 8 servers (Digital Ocean Droplets), each with 8GB RAM / 50GB disk, running Ubuntu 24.04

Other interesting highlights

Some interesting stats:

- Total Boxes Checked: 650 million

- Total cost to run: ~$800

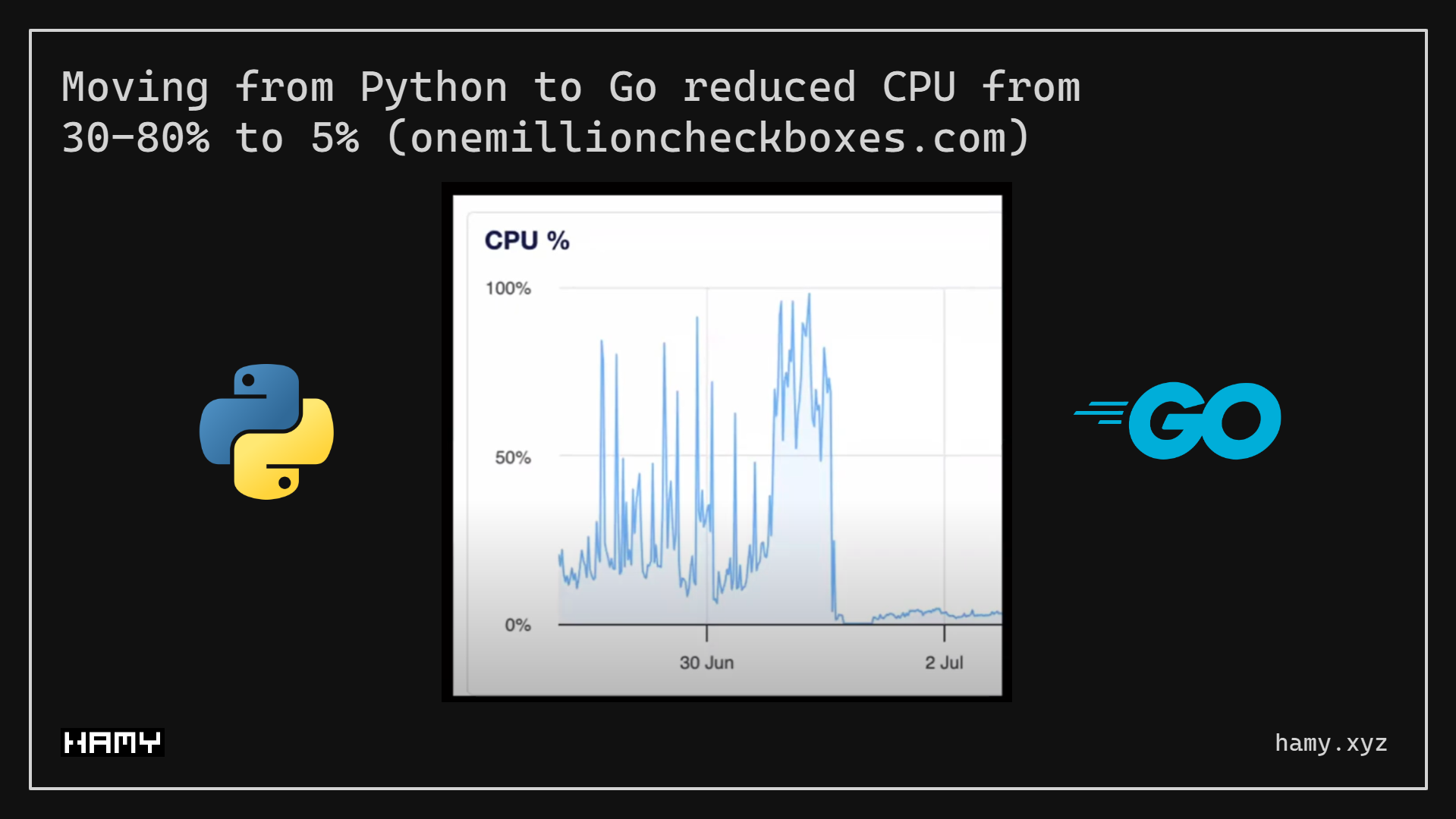

Python to Go

The backend started as Python Flask but was later migrated to Go. Go was substantially (~20x) faster, so starting with Go might have made many of the optimizations and much of the horizontal scaling unnecessary. At 20x faster, it could also have cut the total server cost considerably, perhaps by several hundred dollars.

Hosting Costs

Server cost is likely not the only major contributor to the total - bandwidth was a major concern given the large number of concurrent clients and how much data was being sent to them (every checked box triggers a data emission to ALL clients).

While Digital Ocean is relatively cheap for data egress, it's not the cheapest. Going with a different cloud host like Hetzner or OVH could've saved roughly 90% or 100% on bandwidth costs respectively.

By the same token, a 4 vCPU / 8GB RAM server at DO costs about $48 per month, while a similar server can be found cheaper elsewhere - ~$18 per month on Hetzner.

I know this project is just for "fun" but cost can be a very limiting factor, especially if you're not making money on the project. This is one of the reasons I'm personally self-hosting many of my projects with Coolify, to cap and reduce ongoing costs so I can build more.

Websockets

Websockets are cool and are definitely one way to achieve real-time communication between a server and its clients. But I'm still not convinced they work well at scale.

The issue lies in fan-out: one update has to be sent to each of the n connected clients. For apps that can handle this load, it's great, but those that can't end up either updating slowly (not real-time) or dropping data (not syncing at all).

In OMCB's case it seemed to be doing both - capping max bandwidth (losing data) and letting clients drift out of sync, which required a full state push every now and then.

If both the scalability of bandwidth usage and the ability to perform real-time updates become a problem, Websockets may not be the right answer.
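A quick back-of-the-envelope calculation shows how fast fan-out grows. The numbers below (100 updates per second, 10,000 connected clients, 8 bytes per update) are purely hypothetical and are not OMCB's real traffic figures.

```python
def fanout_bytes_per_second(updates_per_sec: int, clients: int, bytes_per_update: int) -> int:
    """Egress needed when every update is pushed to every connected client."""
    return updates_per_sec * clients * bytes_per_update


# Hypothetical load, chosen only to illustrate the multiplication.
egress = fanout_bytes_per_second(updates_per_sec=100, clients=10_000, bytes_per_update=8)
print(f"{egress / 1_000_000:.1f} MB/s")  # 8.0 MB/s
```

Because both the update rate and the client count tend to rise together as a site gets popular, the product grows much faster than either number alone.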

This is one of the reasons I decided to go with polling for my version of One Million Checkboxes - it's highly cacheable, the client can ask for just what it needs, and no bandwidth is wasted sending data clients don't need. There are downsides - it doesn't update as fast (latency in seconds) and it does waste bandwidth re-sending boxes that haven't changed - but generally I'm okay with the tradeoffs.
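As a rough illustration of why polling caches well, here is a minimal in-memory sketch of chunked polling with a version counter per chunk. It is not taken from either implementation: `ChunkStore`, the chunk size, and the `poll` method are hypothetical names, and a real deployment would typically express the same idea through HTTP caching headers.

```python
from typing import List, Optional, Tuple


class ChunkStore:
    """Checkbox state split into fixed-size chunks, each with a version counter."""

    def __init__(self, total_boxes: int, chunk_size: int) -> None:
        self.chunk_size = chunk_size
        n_chunks = (total_boxes + chunk_size - 1) // chunk_size
        self.chunks: List[bytearray] = [bytearray(chunk_size) for _ in range(n_chunks)]
        self.versions: List[int] = [0] * n_chunks

    def toggle(self, index: int) -> None:
        chunk, offset = divmod(index, self.chunk_size)
        self.chunks[chunk][offset] ^= 1  # flip 0 <-> 1
        self.versions[chunk] += 1        # any change invalidates cached copies

    def poll(self, chunk: int, known_version: int) -> Optional[Tuple[int, bytes]]:
        """Return (version, data) if the chunk changed, else None (nothing to send)."""
        if self.versions[chunk] == known_version:
            return None
        return self.versions[chunk], bytes(self.chunks[chunk])


store = ChunkStore(total_boxes=1000, chunk_size=100)
print(store.poll(0, known_version=0))  # None: nothing changed yet
store.toggle(42)
version, data = store.poll(0, known_version=0)
print(version, data[42])               # 1 1
```

The client only asks for the chunks it is looking at, and an unchanged chunk costs almost nothing to answer. The tradeoff is that one changed box causes the whole chunk to be sent again.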

That said - I think much of the websocket scalability issue here comes from sending all updates to all clients. If updates were limited to just the boxes the client was looking at (and maybe some buffer on each side), it seems like this could cut down on bandwidth / updates by ~99%.
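A sketch of what viewport-scoped updates could look like on the server side, plus the arithmetic behind a figure like ~99%. Both functions are hypothetical, and the estimate assumes that changes are spread evenly across all boxes and that each client watches a window of about 10,000 boxes; neither assumption comes from OMCB itself.

```python
from typing import List


def filter_delta(delta: List[List[int]], start: int, end: int) -> List[List[int]]:
    """Keep only the indices inside one client's window [start, end)."""
    checked, unchecked = delta
    return [
        [i for i in checked if start <= i < end],
        [i for i in unchecked if start <= i < end],
    ]


def expected_reduction(window_boxes: int, total_boxes: int) -> float:
    """Share of updates a client no longer receives, assuming uniform changes."""
    return 1 - window_boxes / total_boxes


print(filter_delta([[5, 1500, 999_999], [10, 2000]], 0, 1280))  # [[5], [10]]
print(f"{expected_reduction(10_000, 1_000_000):.0%}")           # 99%
```

The cost is extra bookkeeping: the server has to track each client's window and send a fresh snapshot whenever the client scrolls somewhere new.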

Next

I've really enjoyed playing with OMCB and learning about how it was put together. If you're interested in more background, I would highly recommend eieio's OMCB talk as it touches on a bunch more stuff including why they built it, game theory, and some fun stories along the way.

I for one am looking forward to the next eieio game to play (and maybe copy).
