Building a Fullstack Web App with SvelteKit and F# (SMASH_THE_BUTTON)

Essay - Published: 2022.11.02 | 7 min read (1,759 words)

fsharp | projects | smash-the-button | sveltekit

DISCLOSURE: If you buy through affiliate links, I may earn a small commission. (disclosures)

Overview

In this post we'll dive into building a full-stack web app with SvelteKit and F#, using SMASH_THE_BUTTON as an example.

SMASH_THE_BUTTON

SMASH_THE_BUTTON is a clicker website that allows you to increment a counter.

The premise is:

- User: Clicks button

- Website: Counts clicks and displays total

My goals for the site were to:

- Learn F#

- Learn Functional Programming

- Decide if I wanted to continue using the language

I learn best by doing, so I built this site to test many different parts of the language and paradigm.

Workflows

Before building or designing any kind of software, I think it's useful to first understand what we're trying to accomplish. This is usually best done from the user's standpoint, as most software aims to solve some sort of user problem. For this we'll use a rough approximation of the JobsToBeDone framework.

- Customer: Bored people on the internet

- Problem: Trying not to be bored

- Requirements:
  - P0
    - Site needs to "work"
    - Low barrier to entry: needs to be super easy to use
  - P1
    - Correctness, Consistency: kinda important, but not really to this user

With a basic understanding of the customers and what they're trying to accomplish, we can move on to the Workflows we'll build to support them.

For this we really only need two workflows:

- PushTheButton
  - Log a customer's button push
  - Persist the push counter increment
- ReadButtonPushes
  - Get the latest push count from Persistence
  - Return it to the Frontend
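Here is a minimal sketch of the two workflows in JavaScript, the language of the SvelteKit snippets in this post. Everything in it is hypothetical. `createInMemoryStore` stands in for Postgres, and `pushTheButton` / `readButtonPushes` only borrow the workflow names. The `{ hits }` request and `{ payload }` response shapes mirror what the frontend code sends and reads. The real backend is F# and may differ.

```javascript
// Hypothetical in-memory stand-in for the Persistence layer (Postgres in the real app).
function createInMemoryStore() {
  let totalPushes = 0;
  return {
    incrementAsync: async (hits) => {
      totalPushes += hits;
    },
    getTotalAsync: async () => totalPushes,
  };
}

// PushTheButton: accept a batch of pushes from the Frontend and persist the increment.
async function pushTheButton(store, { hits }) {
  if (!Number.isInteger(hits) || hits <= 0) {
    throw new Error(`invalid hit count: ${hits}`);
  }
  await store.incrementAsync(hits);
}

// ReadButtonPushes: get the latest push count from Persistence and return it to the Frontend.
async function readButtonPushes(store) {
  return { payload: await store.getTotalAsync() };
}
```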

Architecture

Most software projects have very similar core requirements and thus typically have similar core components:

- Frontend - for end users to interact with

- Backend - for business logic and processing

- Persistence - for accessing / storing data

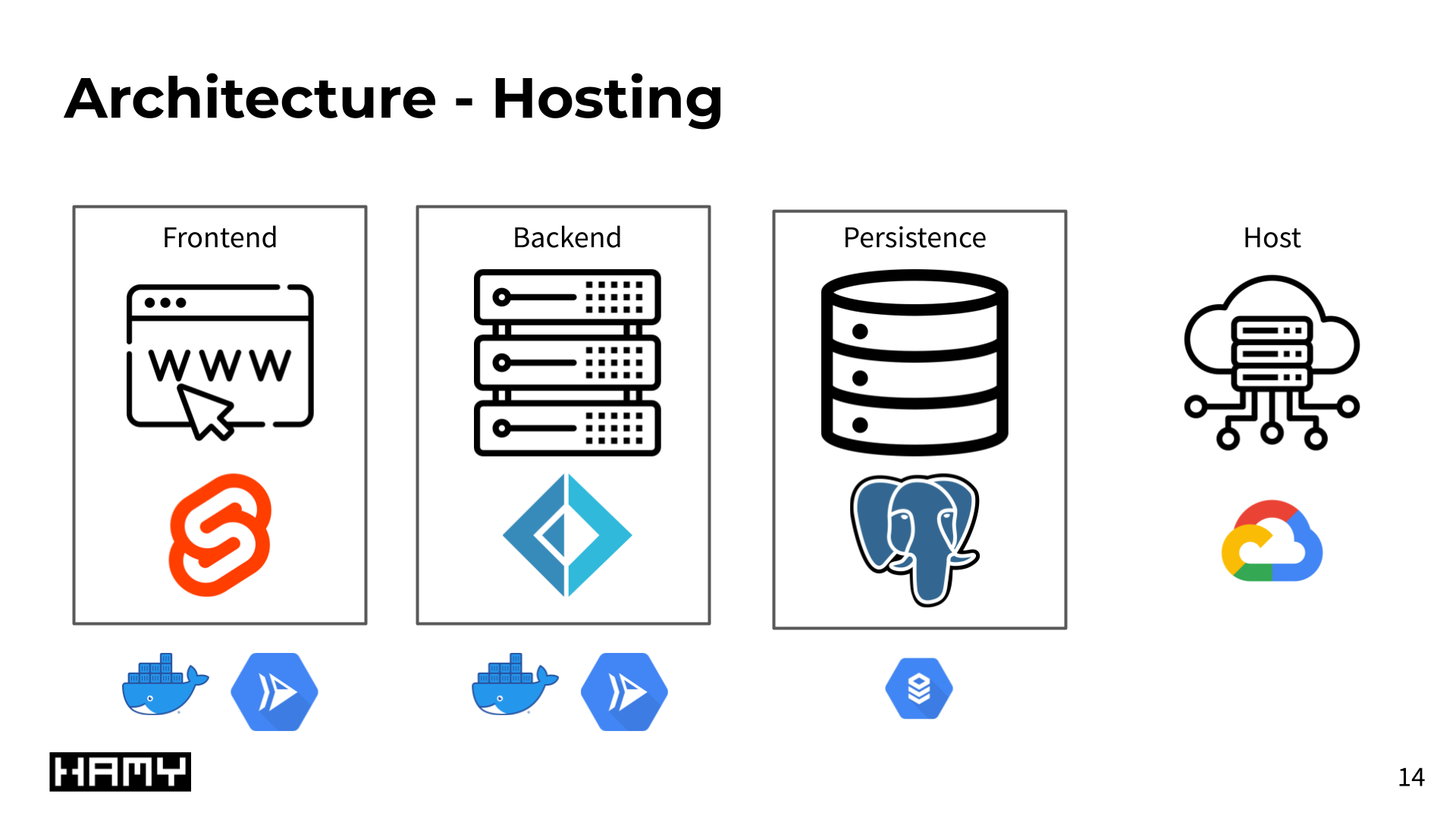

For this project, I decided to use a few familiar tools along with F# to speed things up:

- Frontend - Svelte / SvelteKit

- Backend - F# with Giraffe on .NET

- Persistence - Postgres

For hosting I again went with Google Cloud. I used Cloud SQL to get a cheap, managed Postgres instance, and Cloud Run to host and serve my Frontend and Backend Docker containers.

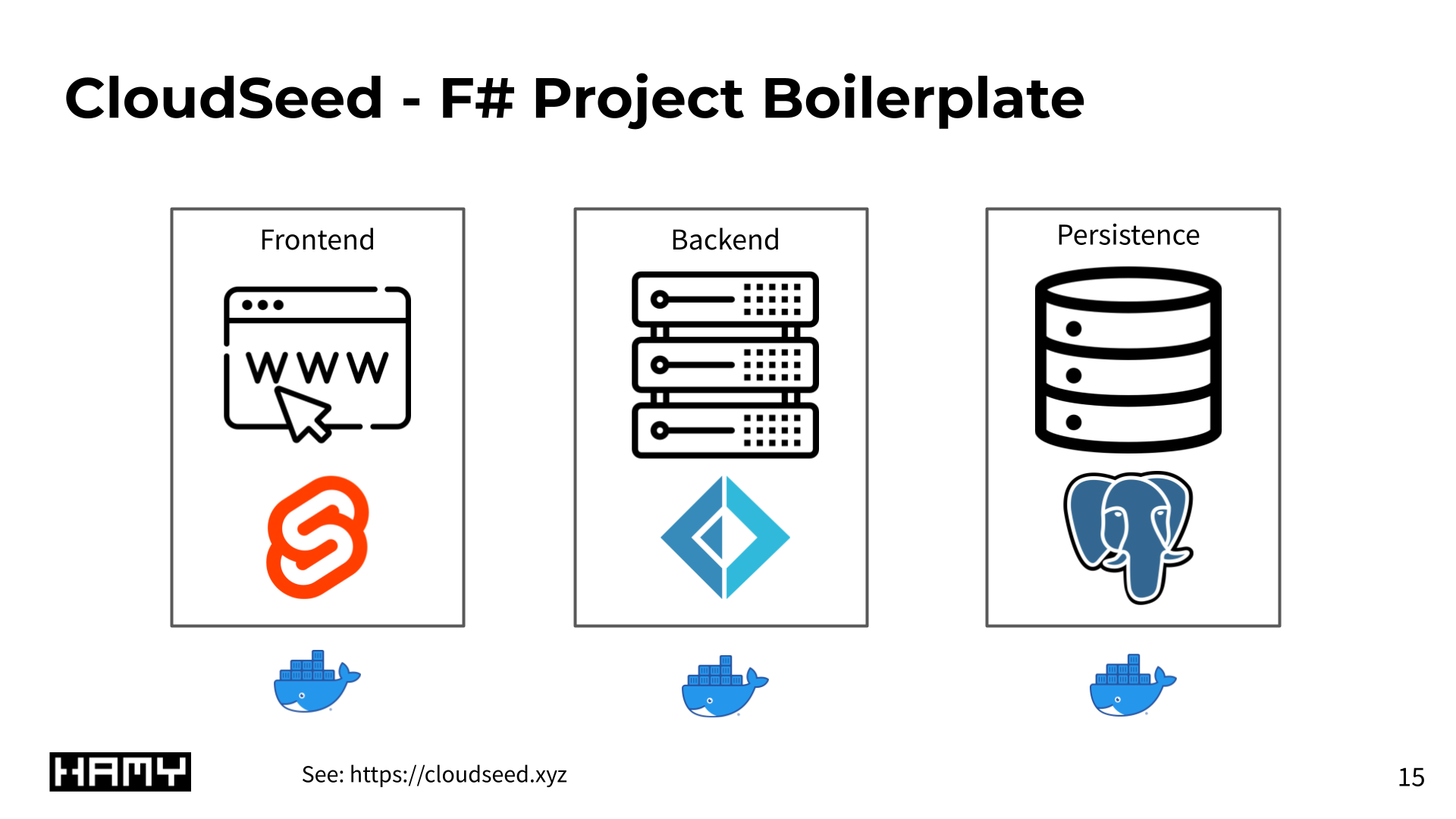

If you've been following along for a while, you'll probably recognize this architecture: it's similar to how I build and run most projects. I packaged these technologies together into a fully dockerized project boilerplate called CloudSeed to make it faster to build production-ready apps. Get the full CloudSeed boilerplate.

F# Backend technologies

I've been following r/fsharp for a while, and there are a lot of questions about which technologies to use to build a decent, real-world F# web server. For this project I went with:

- Web Server: Giraffe on ASP.NET

- Data Layer:

- Testing: xUnit (with FsUnit)

F# and Functional Programming excel at being super simple by removing any and all "magic". I wanted my tech stack to follow the same philosophy. Everything here is battle-tested and uses the smallest abstractions possible while still remaining ergonomic.

For more info: Build a simple F# web API with Giraffe

Building for Scale

From the Workflows and Architecture, it's pretty easy to see how all of this fits together. That said, a few areas were interesting scalability-wise and are worth a shallow dive.

When we consider the Workflows we're building, there are two obvious bottlenecks:

- PushTheButton - Writes
  - Concurrent updates from many sources
  - Issues:
    - Correctness - ensuring atomic increments
    - DB load via concurrent IO
- ReadButtonPushes - Reads
  - Concurrent reads
  - Issues:
    - Correctness - ensuring we're reading up-to-date values
    - DB load via concurrent IO

Most projects never reach a scale where any of these optimizations are necessary. I still think they're useful thought exercises, because they help ensure that whatever design you come up with could reasonably scale if that became an issue in the future. Moreover, since my primary goals for building this were to learn F# and Functional Programming, I took this as a challenge to discover how I might solve these common problems functionally.
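The "ensuring atomic increments" concern is easiest to see in a small JavaScript simulation against a hypothetical in-memory counter. Each `await` stands in for a database round trip. With read-modify-write, concurrent requests read the same old value and overwrite each other. A single-step increment does not lose updates. It is the in-memory analogue of a SQL `UPDATE ... SET count = count + $1`.

```javascript
// Read-modify-write: read the value, pause for "IO", then write back read + hits.
async function readModifyWriteIncrement(db, hits) {
  const current = db.count;   // concurrent requests can all read the same value here
  await Promise.resolve();    // stand-in for the DB round trip
  db.count = current + hits;  // later writes overwrite earlier ones
}

// Atomic increment: the read and write happen in one step after the pause.
async function atomicIncrement(db, hits) {
  await Promise.resolve();    // stand-in for the DB round trip
  db.count += hits;           // single step, so no update is lost
}

// Fire `requests` concurrent +1 increments and return the final count.
async function runConcurrently(incrementFn, requests) {
  const db = { count: 0 };
  await Promise.all(Array.from({ length: requests }, () => incrementFn(db, 1)));
  return db.count;
}
```

`runConcurrently(readModifyWriteIncrement, 5)` resolves to 1, while `runConcurrently(atomicIncrement, 5)` resolves to 5. Four of the five read-modify-write clicks are lost.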

Bottleneck - Writes

For the write bottlenecks, we can use some common patterns to deal with load:

- Frontend
  - Batch clicks in browser
    - Count clicks locally
    - Send on a time interval
    - Result: a steady, bounded load per client over time
      - Less load on Backend, fewer QPS to Persistence
      - Configurable to trade off latency vs scalability
- Backend
  - Batch updates on server
    - In-memory queue with F# MailboxProcessor
    - Combine similar update messages into a single operation
    - Flush to Persistence
    - Result: less load on Persistence, since n queued updates become as few as n / batchSize writes
      - Configurable to trade off latency vs scalability
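A back-of-the-envelope function can estimate what the two batching layers buy. The inputs are hypothetical, and the function assumes a best case. It treats every client as clicking at least once per flush interval. It also treats the backend queue as always filling a whole batch before flushing. The real BatchWriter also flushes whenever its queue is empty, so under light load its batches are smaller and DB writes stay closer to the request rate.

```javascript
// Rough write-load estimate for the two batching layers (all inputs hypothetical).
// Best case: every client clicks at least once per flush interval, and the
// backend queue always fills a whole batch before flushing.
function estimateWriteLoad({ clients, clicksPerSecondPerClient, flushIntervalMs, batchSize }) {
  // No batching: every click is its own request (and its own DB write).
  const unbatchedRequestsPerSecond = clients * clicksPerSecondPerClient;

  // Frontend batching: each client sends one request per flush interval.
  const requestsPerSecond = (clients * 1000) / flushIntervalMs;

  // Backend batching: n queued requests become n / batchSize DB writes.
  const dbWritesPerSecond = requestsPerSecond / batchSize;

  return { unbatchedRequestsPerSecond, requestsPerSecond, dbWritesPerSecond };
}
```

Take 1,000 clients each clicking 10 times a second, a 2s flush interval, and a batch size of 50. That gives 10,000 requests/s without batching, 500 requests/s with frontend batching, and about 10 DB writes/s with both layers.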

Bottleneck - Writes - Frontend

Batching clicks in SvelteKit is pretty simple. Basically we have:

- A function that sends all unsaved clicks (unsavedLocalCount) on an interval (currently 2s)

- When you click the button, we increment unsavedLocalCount (and localCount, which drives the on-screen total)

Button code:

<button
  on:click={() => {
    localCount += 1
    unsavedLocalCount += 1
  }}
  type="button">
  SMASH
</button>

Script code that sends the batched clicks on an interval:

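import { onMount } from 'svelte'

let remoteCount = 0
let localCount = 0
// Clicks made since the last send
let unsavedLocalCount = 0

let saveButtonPushes = () => {
  // Nothing new since the last send, so skip the request
  if (unsavedLocalCount === 0) {
    return
  }
  // Send every unsaved click as a single request, then reset the counter
  sendButtonPushesCommandAsync({
    hits: unsavedLocalCount
  })
  unsavedLocalCount = 0
}

// Start the 2s send loop in onMount so it only runs in the browser (not during SSR),
// and clear it when the component is destroyed
onMount(() => {
  const saveButtonPushesInterval = setInterval(saveButtonPushes, 2000)
  return () => clearInterval(saveButtonPushesInterval)
})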
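The snippet above resets `unsavedLocalCount` as soon as it calls `sendButtonPushesCommandAsync`, so a failed request silently drops that batch. Correctness is only a P1 here, so that is a fair trade-off. If you do want to keep those clicks, one option is to put the count back on failure. Below is a framework-free sketch of that idea. `createClickBatcher` and the injected `sendAsync` are hypothetical, and the sketch assumes the send function returns a Promise that rejects when the request fails. It also skips a flush while the previous request is still pending, so a slow backend never gets overlapping batches from the same client.

```javascript
// Framework-free sketch of client-side click batching (all names hypothetical).
function createClickBatcher(sendAsync) {
  let unsaved = 0;       // clicks not yet handed to sendAsync
  let inFlight = false;  // true while a batch request is pending

  return {
    click() {
      unsaved += 1;
    },

    // Intended to be called on an interval, e.g. every 2000 ms.
    async flush() {
      if (unsaved === 0 || inFlight) {
        return; // nothing new, or the previous batch has not settled yet
      }
      const hits = unsaved;
      unsaved = 0;
      inFlight = true;
      try {
        await sendAsync({ hits });
      } catch {
        unsaved += hits; // put the failed batch back so the next flush retries it
      } finally {
        inFlight = false;
      }
    },

    pending() {
      return unsaved;
    },
  };
}
```

In a Svelte component, `on:click` would call `batcher.click()`. `onMount` would start `setInterval(batcher.flush, 2000)` and return a cleanup function that clears it. Passing `batcher.flush` directly is safe because it uses closure state, not `this`.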

Bottleneck - Writes - Backend

The frontend code tries to prevent any one client from sending too many writes. There are still cases where the backend could be flooded with traffic, for example when a lot of clients are active at the same time or when someone decides to hit the APIs directly. For this, I decided to try out F#'s built-in agent system (MailboxProcessor) to build a simple in-memory queue.

- F# MailboxProcessor to queue up click writes

- When batch is full / no more messages -> combine into a single write operation and flush to DB

BatchWriter:

module BatchWriter =

    /// Creates a new in-memory batching queue and returns a function that posts messages to it.
    let createBatchWriter<'TMessage> (batchSize : int) (writeFnAsync : list<'TMessage> -> Async<unit>) =
        let agent = MailboxProcessor.Start(fun inbox ->
            let rec loop (messageList : list<'TMessage>) =
                async {
                    let! message = inbox.Receive()
                    // Prepend, so the batch handed to writeFnAsync is newest-first
                    let newMessageList = message :: messageList

                    // Flush when the batch is full or nothing else is waiting in the mailbox
                    if newMessageList.Length >= batchSize || inbox.CurrentQueueLength = 0 then
                        do! writeFnAsync newMessageList
                        return! loop []
                    else
                        return! loop newMessageList
                }
            loop [])

        // The closure keeps the agent private; callers can only post to it
        fun (message : 'TMessage) -> agent.Post message

This is basically a batchWriter "factory". Each call starts a new MailboxProcessor with its own queue and returns a closure that posts messages to it.

It can be used like this:

let myBatchWriter = createBatchWriter batchSize myBatcherFunction

Note that this queue is in-memory, so if something bad happens (for example, the instance crashes or restarts) we'll lose any queued messages. That doesn't really matter for this project. The queue should also easily handle more than 50k messages.
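For readers more at home in JavaScript, here is a rough analogue of the same pattern. It is a sketch, not a port of MailboxProcessor. JavaScript is single-threaded, so a plain array plus a `running` flag is enough to process batches one at a time. `createBatchWriter` here and the `{ hits }` message shape are assumptions for illustration.

```javascript
// Rough JavaScript analogue of the F# BatchWriter (a sketch, not a MailboxProcessor port).
function createBatchWriter(batchSize, writeFnAsync) {
  const queue = [];
  let running = false;

  async function drain() {
    // Keep flushing until the queue is empty; messages posted while a write
    // is in progress are picked up by the next iteration.
    while (queue.length > 0) {
      const batch = queue.splice(0, batchSize);
      try {
        await writeFnAsync(batch);
      } catch (err) {
        // In-memory and lossy: a failed batch is logged and dropped.
        console.error("batch write failed", err);
      }
    }
    running = false;
  }

  return function post(message) {
    queue.push(message);
    if (!running) {
      running = true;
      queueMicrotask(drain); // let synchronous posts pile up into one batch
    }
  };
}
```

Pair it with a write function that sums `hits` across each batch. That sum is the "combine similar update messages into a single operation" step. With a `batchSize` of 3, seven posts of `{ hits: 1 }` become three writes of 3, 3 and 1 hits.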

Bottleneck - Reads

The final bottlenecks to talk about are on the read side. One important thing for this project is for users to know that the counter is actually updating. We do a lot of this work locally. We still need to periodically ask the server for the global count so we can give a better clicking experience.

For reads I also took a two-pronged approach:

- Frontend
  - Only read on a cadence
- Backend
  - Cache global read results

Bottleneck - Reads - Frontend

One of the major ways to increase efficiency in a system is to do less work. Since all work in our system originates from a client, we can tell the client to do less.

- Read global value from Backend, but only ask every 5s

I like the polling approach because it's simple and it's very configurable if we actually start having scale problems.

On the SvelteKit frontend:

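import { onMount } from 'svelte'

let remoteCount = 0
let localCount = 0
let unsavedLocalCount = 0

// Displayed total = last known global count + this session's clicks.
// Caveat: once saved, this session's clicks are also included in remoteCount,
// so unless localCount is reset elsewhere (not shown) they are counted twice.
$: totalCount = remoteCount + localCount

let fetchRemotePushes = () => {
  getRemotePushesQueryAsync()
    .then(totalRemotePushes => {
      // Fall back to 0 if the response has no payload
      remoteCount = totalRemotePushes.payload ?? 0
    })
}

// Fetch once on mount, then poll every 5s; stop polling when the component is destroyed
onMount(() => {
  fetchRemotePushes()
  const fetchTotalPushesInterval = setInterval(fetchRemotePushes, 5000)
  return () => clearInterval(fetchTotalPushesInterval)
})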
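The same kind of estimate works for reads. Assume every open client polls on the 5s cadence and each app instance serves cached reads for 2s (the backend cache described in the next section). A rough model is then: backend reads/s = clients / 5, and DB reads per instance are capped at one per cache window. The inputs below are hypothetical. The model assumes requests are spread evenly across instances and ignores the thundering-herd race.

```javascript
// Rough read-load estimate for polling + a per-instance time-based cache.
// Inputs are hypothetical; assumes even load across instances and no thundering herd.
function estimateReadLoad({ clients, pollIntervalMs, cacheWindowMs, appInstances }) {
  // Every open client polls once per interval.
  const backendReadsPerSecond = (clients * 1000) / pollIntervalMs;

  // Each instance reads from the DB at most once per cache window,
  // and never more often than requests actually arrive at it.
  const requestsPerInstance = backendReadsPerSecond / appInstances;
  const maxDbReadsPerInstance = 1000 / cacheWindowMs;
  const dbReadsPerSecond = appInstances * Math.min(requestsPerInstance, maxDbReadsPerInstance);

  return { backendReadsPerSecond, dbReadsPerSecond };
}
```

Consider 1,000 clients polling every 5s, served by 2 instances with a 2s cache. The backend sees 200 reads/s, while Postgres sees about 1 read/s.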

Bottleneck - Reads - Backend

Of course, a ton of concurrent clients, or people hitting our backend APIs directly, could still overload our IO bottlenecks. The common solution to this is caching.

- Cache global counter read for 2s

I took this as an opportunity to try my hand at writing a simple cache myself. It's got a lot of shortcomings:

- Garbage Collection is lossy / random

- Potential for thundering herd on cache eviction

- Probably not optimally performant

- Potential for stale memory holdings

So I'd probably recommend that most people use the official FSharp.Data.Runtime.Caching implementation instead.

SimpleTimedMemoryCache

module SimpleTimedMemoryCache =

    open System.Collections.Concurrent

    type TimeCachedItem<'Item> = {
        Item: 'Item
        ExpiryTimeEpochMs: int64 }

    /// Returns a fetch function: serve a cached value until it expires, otherwise run the fetcher.
    let createTimeBasedCacheAsync<'TCache> (timeWindowMs : int64) (totalItemCapacity : int) : (int64 -> string -> Async<'TCache> -> Async<'TCache>) =
        let cachedItemLookup = ConcurrentDictionary<string, TimeCachedItem<'TCache>>()

        // Lossy clean-up: once over capacity, remove an arbitrary few keys (not LRU)
        let trashCollect (countToRemove : int) : unit =
            if cachedItemLookup.Count > totalItemCapacity then
                cachedItemLookup.Keys
                |> Seq.truncate countToRemove
                |> Seq.toList
                |> List.iter (fun k -> cachedItemLookup.TryRemove(k) |> ignore)

        let fetchFromCacheOrFetcherAsync (currentTimeMs : int64) (cacheId : string) (fetcherFnAsync : Async<'TCache>) : Async<'TCache> =
            async {
                match cachedItemLookup.TryGetValue(cacheId) with
                | true, item when item.ExpiryTimeEpochMs > currentTimeMs ->
                    // Still fresh: no IO
                    return item.Item
                | _ ->
                    // Missing or expired: fetch, store with a new expiry, then trim
                    let! newItem = fetcherFnAsync
                    cachedItemLookup[cacheId] <- { Item = newItem; ExpiryTimeEpochMs = currentTimeMs + timeWindowMs }
                    trashCollect 2
                    return newItem
            }

        fetchFromCacheOrFetcherAsync

    /// Single-key version of the cache: memoizes one value for timeWindowMs.
    let createTimeBasedMemoAsync<'TMemo> (timeWindowMs : int64) =
        let placeholderCacheKey = "_memo_key"
        let cacheFunction = createTimeBasedCacheAsync<'TMemo> timeWindowMs 1
        fun (currentTimeMs : int64) (fetcherFnAsync : Async<'TMemo>) ->
            cacheFunction currentTimeMs placeholderCacheKey fetcherFnAsync

Theoretically this limits IO for reads to 1 every 2 seconds per app instance. In practice, we actually end up with some thundering herd possibilities due to concurrency race conditions when multiple requests hit an evicted cache item.

But it still does the job.
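One common mitigation for that race is to share a single in-flight fetch between concurrent callers, often called "single flight" or request coalescing. Here is a sketch of the idea in JavaScript for a single cached value, like `createTimeBasedMemoAsync`. `createTimedMemo` is a hypothetical name, not part of the post's code. It takes a function that returns a Promise rather than a Promise itself. JavaScript Promises start running as soon as they are created, whereas an F# `Async` value does nothing until it is run.

```javascript
// Sketch of a time-based memo with request coalescing ("single flight").
// Hypothetical analogue of createTimeBasedMemoAsync, not the post's F# code.
function createTimedMemo(timeWindowMs) {
  let cached = null;   // { value, expiresAtMs } from the last successful fetch
  let inFlight = null; // Promise shared by every caller while a fetch is running

  return function get(currentTimeMs, fetcherAsync) {
    if (cached !== null && cached.expiresAtMs > currentTimeMs) {
      return Promise.resolve(cached.value); // fresh hit: no IO
    }
    if (inFlight === null) {
      // First caller after expiry starts the fetch; later callers reuse it.
      inFlight = fetcherAsync()
        .then((value) => {
          cached = { value, expiresAtMs: currentTimeMs + timeWindowMs };
          return value;
        })
        .finally(() => {
          inFlight = null; // allow the next expiry to trigger a new fetch
        });
    }
    return inFlight;
  };
}
```

With this in place, five concurrent callers arriving after an expiry trigger one fetch instead of five. In F#, the analogous move would be to cache the in-flight `Task` (for example, one created with `Async.StartAsTask`) instead of only the finished value.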

Next Steps

That's it - my whirlwind tour of how I built a fullstack website with SvelteKit and F#.

- Give SMASH_THE_BUTTON a few clicks

- Get started with F# and Docker

Want more like this?

The best way to support my work is to like / comment / share this post on your favorite socials.